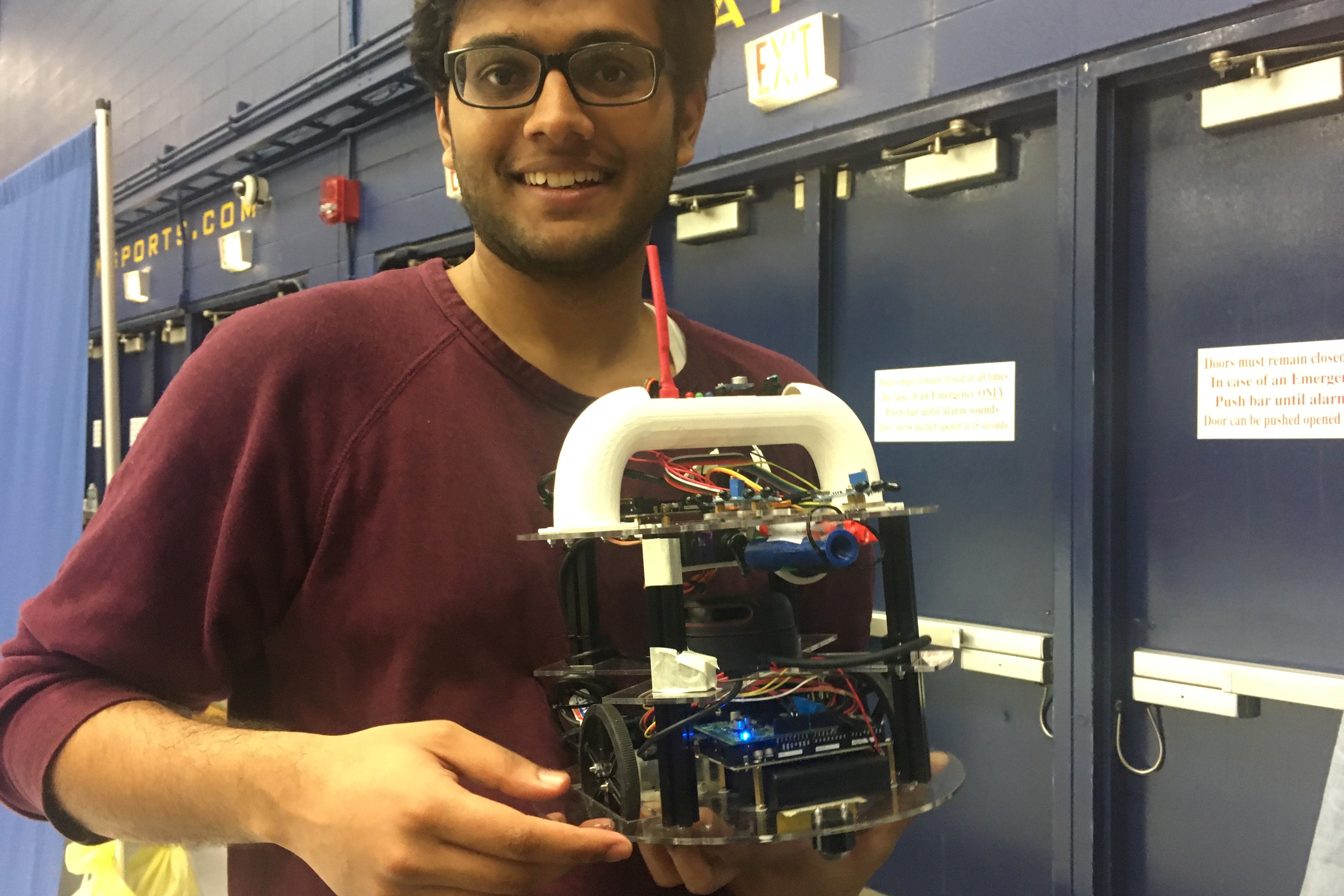

Robotic Sheepherding

2022

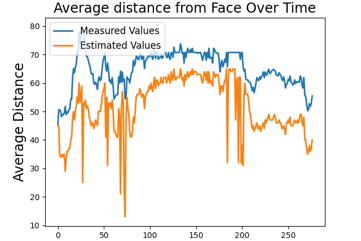

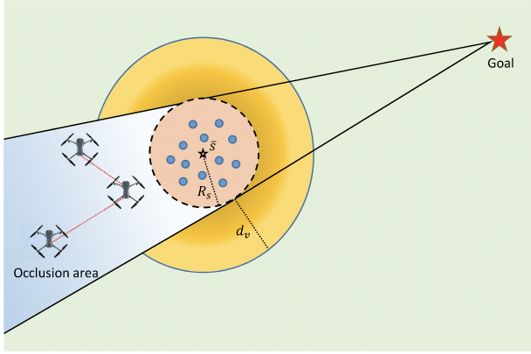

In this project, I designed and implemented an algorithm for a robotic simulation of sheep herding using the Misty robot and SLAM image mapping. We used OpenCV color tracking and a Kalman filter to estimate the position of the herded flock and to generate control vectors that let a Misty robot herd the flock much as a sheepdog would.
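The position estimate can be illustrated with a minimal scalar Kalman filter. This is only a sketch under assumptions: the project's actual state model is not described here, so the random-walk model, the noise values `q` and `r`, and the class name `Kalman1D` are all hypothetical. In practice one such filter per image axis (or a 2D version) would smooth the noisy flock centroid coming from color tracking.

```python
class Kalman1D:
    """Scalar Kalman filter with a random-walk motion model (assumed)."""

    def __init__(self, x0: float, p0: float = 1.0, q: float = 0.01, r: float = 1.0):
        self.x = x0  # current position estimate
        self.p = p0  # variance of the estimate
        self.q = q   # process noise variance (hypothetical value)
        self.r = r   # measurement noise variance (hypothetical value)

    def update(self, z: float) -> float:
        """Fold one noisy measurement z into the estimate and return it."""
        # Predict: position assumed unchanged, uncertainty grows.
        self.p += self.q
        # Correct: blend prediction and measurement using the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


# Usage: smooth a stream of noisy x-coordinates of the flock centroid.
kf = Kalman1D(x0=0.0)
for measurement in [10.2, 9.7, 10.1, 9.9]:
    estimate = kf.update(measurement)
```

A larger `r` trusts the camera less and smooths more; a larger `q` lets the estimate follow a fast-moving flock more closely.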

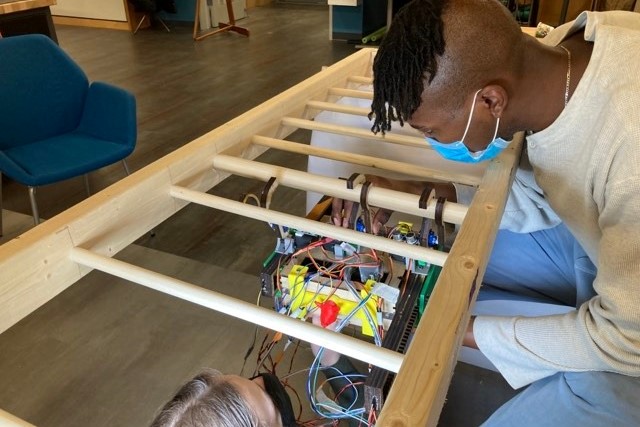

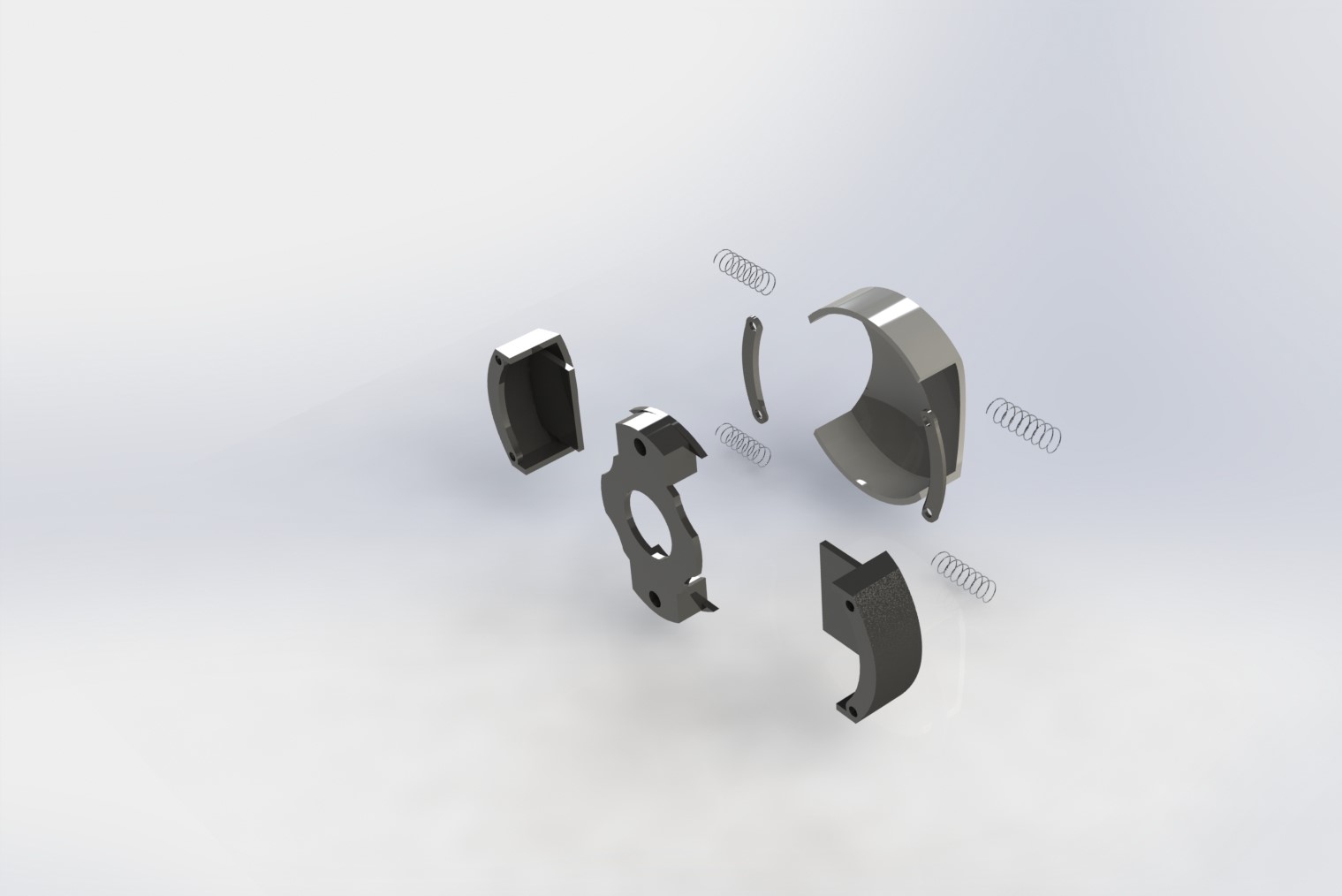

Leg Locker

2022

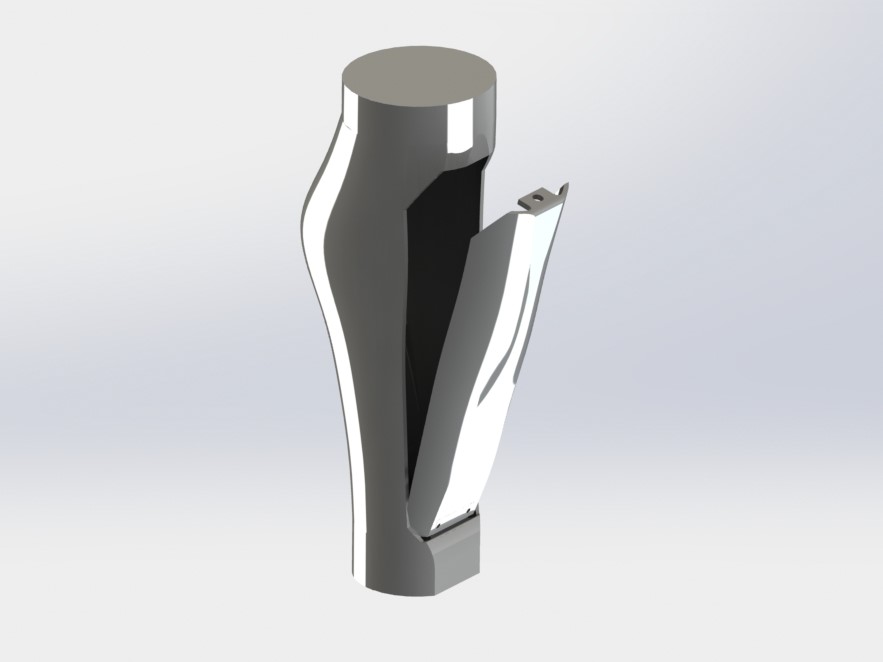

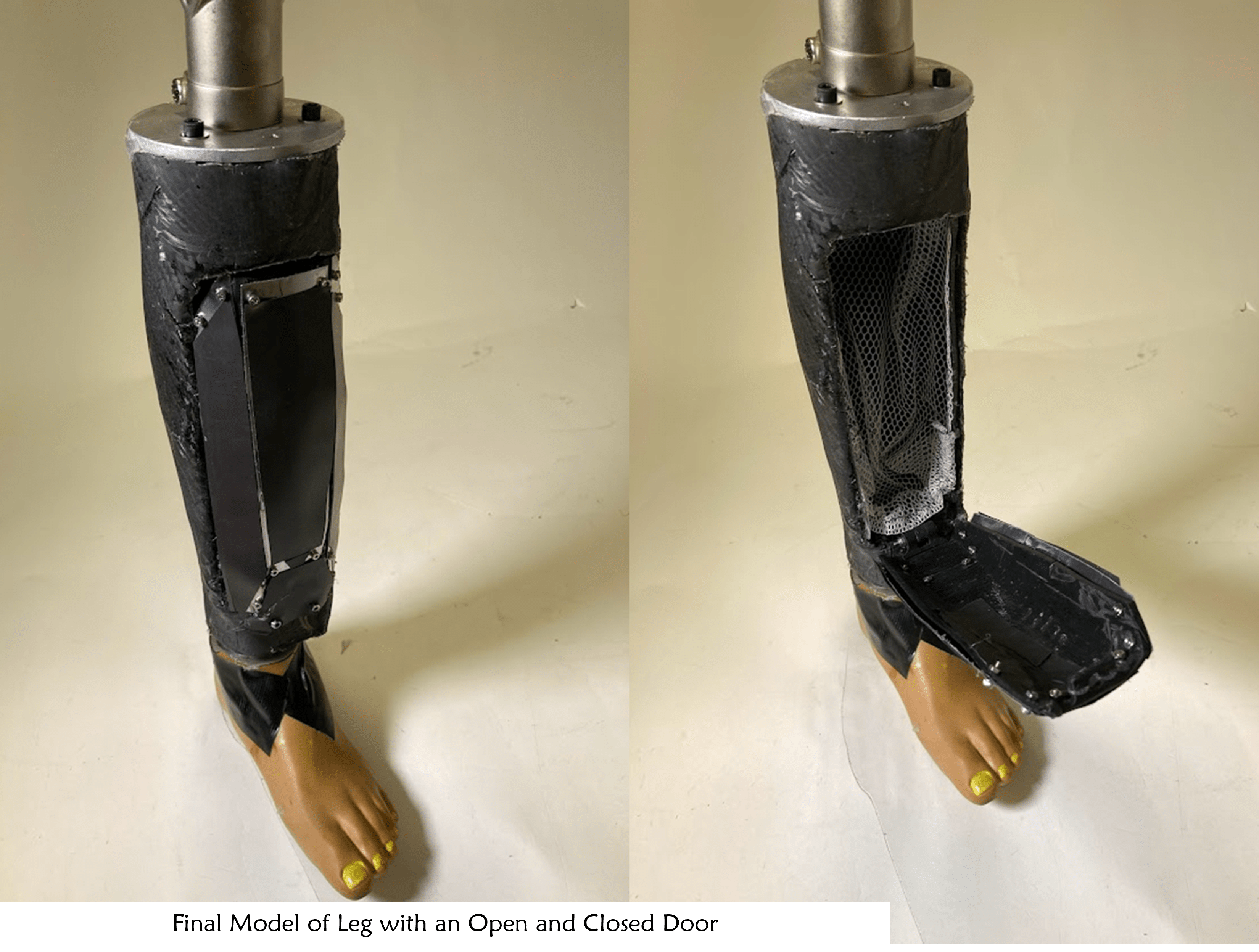

Leg Locker is a project originally conceptualized by our classmates David Gelfald, a Paralympic swimmer, and Jeffrey Blitt. David wears a lower-limb prosthetic on his left leg and noticed that there is a lot of unused space within the leg. Being able to store food, liquid, or small objects within that space would add extra utility to an already useful device for David. He suggested turning this concept into our final project for senior design.

Viennese Waltzing Robot

2021

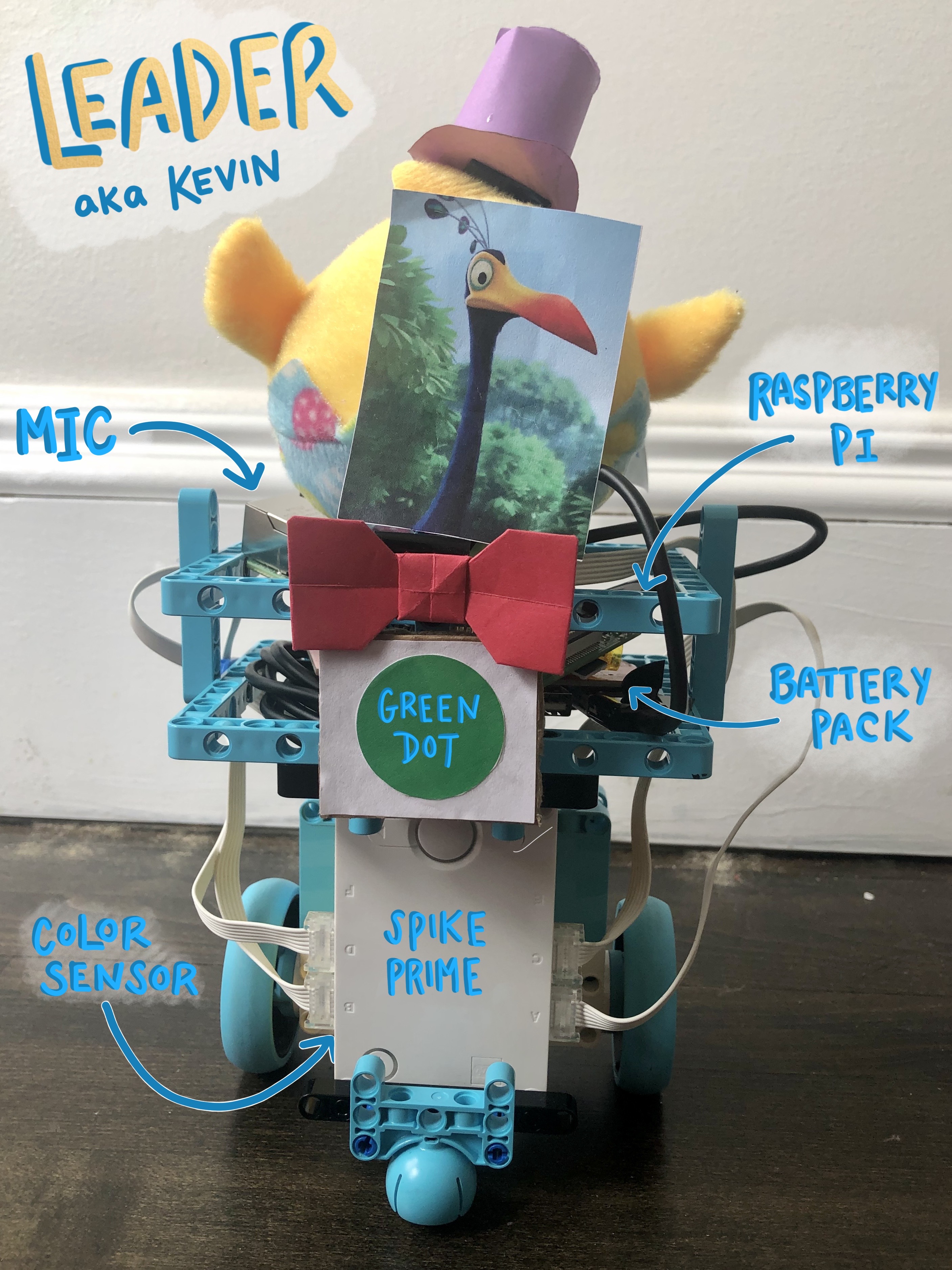

Using SPIKE LEGO Robotics kits, I worked with two partners to design a system of robots to “dance” based on the Viennese Waltz. Our three robots followed a counterclockwise outer circle alongside other robot dancing “partners” while continuously spinning in smaller loops, similar to the rotary style of the waltz. Our primary system consisted of a “leader” and a “follower” robot, where the dance “leader” switched out mid-dance with an alternate “leader” robot. We based the design of our robots on characters from the Disney movie “Up.”

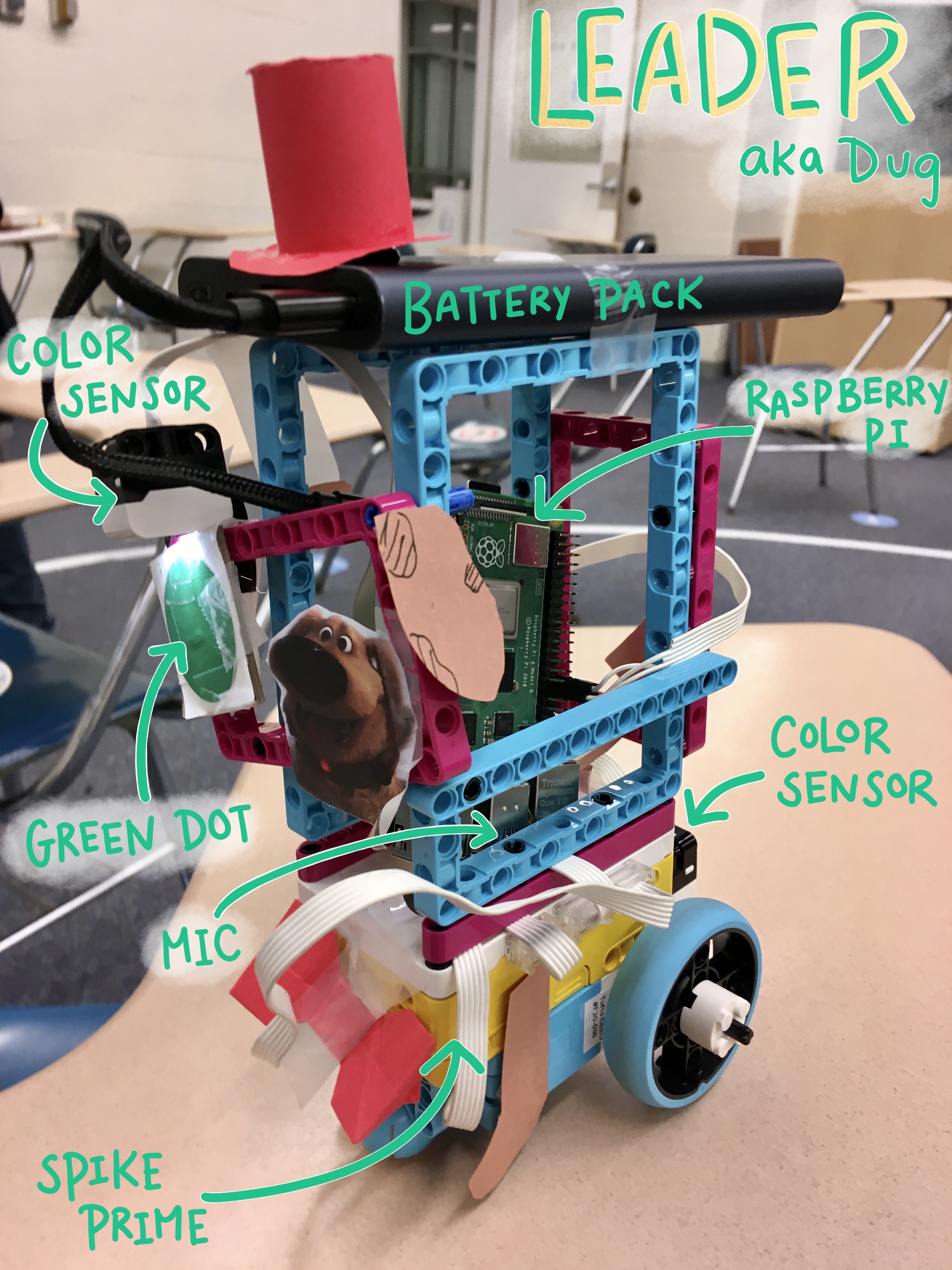

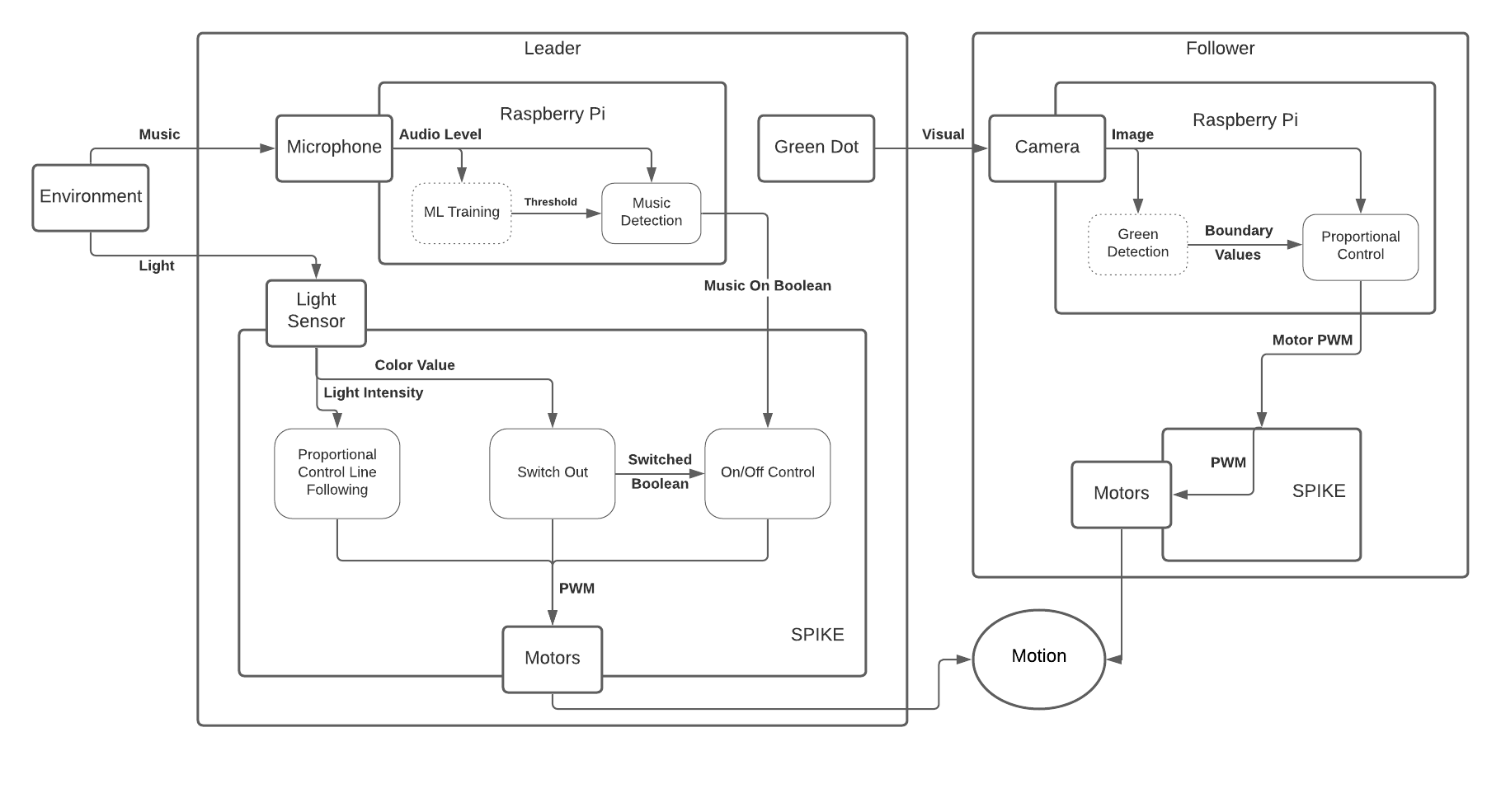

In our three-part system, both “Leader” robots are constructed from a SPIKE Prime attached to a Raspberry Pi powered by a battery pack. The Leader receives input from a microphone to detect music. It uses a color sensor to detect its place on a line and outputs proportional control values to its two motors. A green dot is attached to the Leader for the Follower’s color tracking.

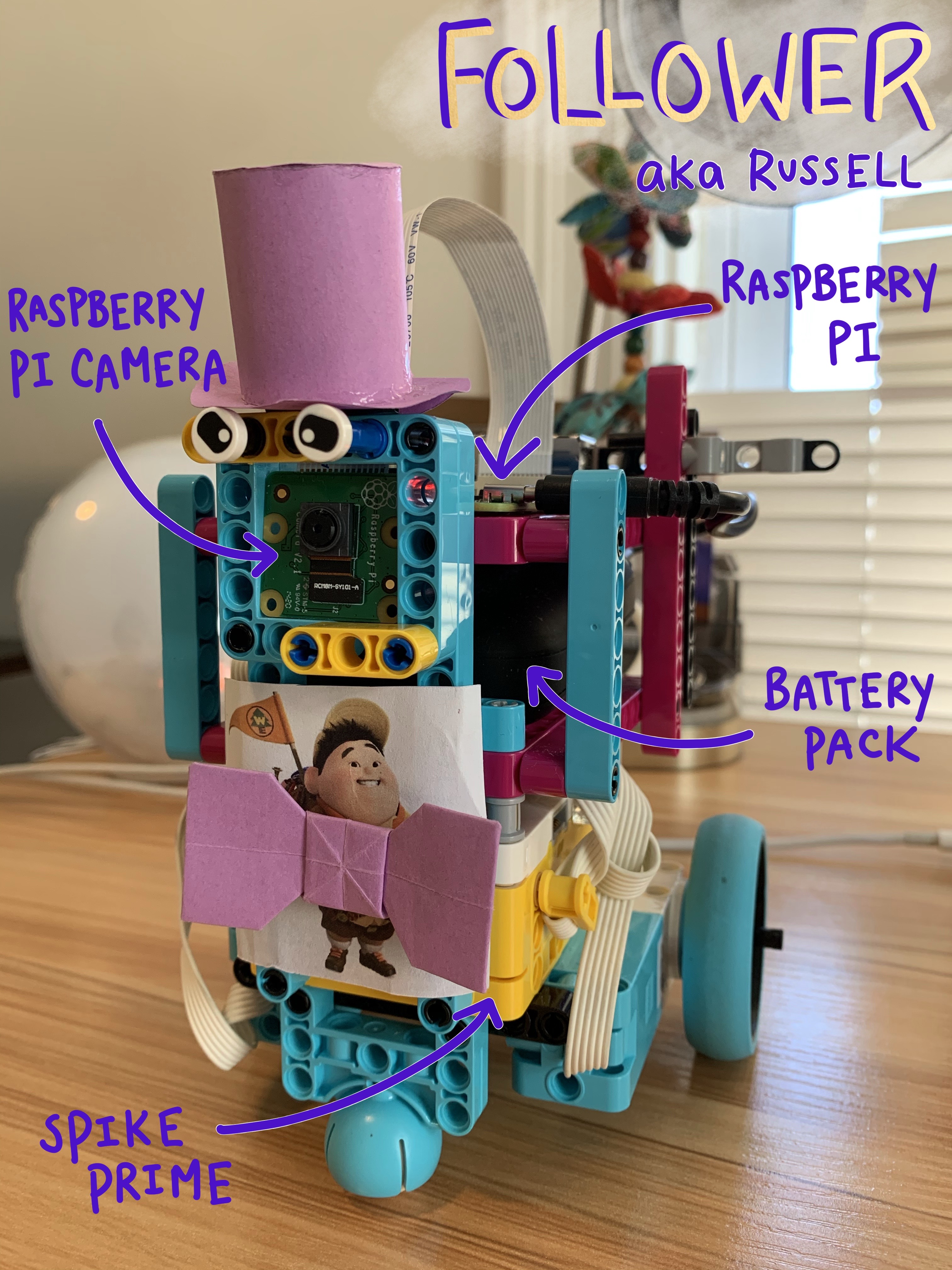

Like the “Leader,” the “Follower” robot is constructed from a SPIKE Prime and a battery-pack-powered Raspberry Pi 4. The Follower receives input from a Raspberry Pi camera, read with cv2, attached to the front of the robot. It uses image processing to track the green dot on the “Leader” robot. Output speed and direction changes are then sent to the SPIKE’s motors.

Line Following

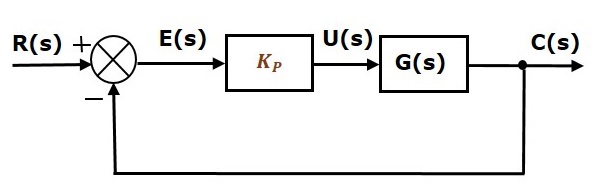

To navigate the outer waltz circle, we use a proportional control system with color tracking along a line. For this line following task, I set the threshold value to the average of the “online” and “offline” color measurements so the “leader” would follow along the edge of the line.

Error is measured as the difference between this “threshold” value and the actual sensor value, then scaled by a proportionality constant “Kp” that is tuned to account for environmental factors. The speed of each wheel motor is adjusted by this scaled error to keep the “Leader” following along the line.
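The threshold and proportional correction described above can be sketched as follows. This is an illustration under assumptions, not the code that ran on the robot: the function names, the base speed, the speed limit, and which wheel speeds up for a positive error are all hypothetical and depend on which edge of the line is followed.

```python
def threshold_from_calibration(on_line: float, off_line: float) -> float:
    """Midpoint between the sensor reading on the line and off the line."""
    return (on_line + off_line) / 2.0


def clamp(value: float, limit: float) -> float:
    """Keep a motor command within [-limit, +limit]."""
    return max(-limit, min(limit, value))


def wheel_speeds(sensor: float, threshold: float, kp: float,
                 base_speed: float, max_speed: float = 100.0):
    """Return (left, right) speeds for one control step."""
    error = sensor - threshold  # zero when the sensor sits on the line edge
    turn = kp * error           # proportional correction
    # Assumed sign convention: positive error steers by speeding up the left wheel.
    left = clamp(base_speed + turn, max_speed)
    right = clamp(base_speed - turn, max_speed)
    return left, right


# Usage with made-up calibration readings.
threshold = threshold_from_calibration(on_line=20, off_line=80)
left, right = wheel_speeds(sensor=70, threshold=threshold, kp=0.5, base_speed=30)
```

A small `Kp` gives gentle corrections that may drift off tight curves; a large `Kp` reacts quickly but can make the robot oscillate across the edge.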

Color Tracking

To maintain a “dance” between the Waltzing Robots, we based the “follower” robot’s movement on tracking a green dot on the “leader” robot. To capture images, we used a camera with OpenCV alongside the SPIKE Prime to identify a green ball shape using an image-processing mask, dilations, and erosions. In our streamed video, we trace a yellow circle with a centered red dot over the detected shape, and its coordinates and dimensions are returned to the proportional controller. In our initial trials, we used face tracking instead of color tracking for our follower robot; however, we switched to a color tracking system to decrease the follower robot’s processing time.

For the movement of the follower, we used a proportional controller to keep the green dot at a constant size and position in the frame. The speed of the follower’s movement depends on the measured size and position of the dot, so that the follower can speed up to catch up if it loses track of the dot and turn to stay in line with the leader robot as it spins throughout the dance.
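One way to picture this controller is the sketch below. It is a hypothetical illustration: the frame width, target radius, gains, catch-up speed, and the function name `follower_command` are assumptions, and the real robot's behavior when the dot is lost may differ from the simple "drive forward" choice made here.

```python
from typing import Optional, Tuple


def follower_command(dot: Optional[Tuple[float, float]],
                     frame_width: float = 640.0,
                     target_radius: float = 40.0,
                     kp_speed: float = 1.5,
                     kp_turn: float = 0.25,
                     catch_up_speed: float = 60.0) -> Tuple[float, float]:
    """Return (left, right) motor speeds from the tracked dot.

    dot is (x_center_px, radius_px), or None when no dot was detected.
    """
    if dot is None:
        # Assumed recovery behavior: drive straight to catch up with the leader.
        return catch_up_speed, catch_up_speed
    x, radius = dot
    # A dot smaller than the target means the leader is far away: go faster.
    forward = kp_speed * (target_radius - radius)
    # A dot right of the image center means the leader is to the right: turn right.
    turn = kp_turn * (x - frame_width / 2.0)
    return forward + turn, forward - turn


# Usage: dot seen slightly right of center and smaller than the target size.
left, right = follower_command((420.0, 30.0))
```

Using the dot's radius as a stand-in for distance avoids any extra range sensor, at the cost of being sensitive to partial occlusion of the dot.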

Leader Switch Out

Although a waltz traditionally has two dancers, we had a three-robot system and wanted to let all three robots “participate” in the dance. We used the SPIKE Prime color sensor to detect when the “Leader” robot runs over “red.” Upon sensing “red,” the two leader robots switch out with one another.

Music Detection

We used PyAudio and a 1D linear classifier to identify the start and stop of waltz music using a microphone connected to a Raspberry Pi 4. We then sent indicator values to the SPIKE over serial to start and stop the robot. While we achieved some success with this method, we struggled to gather a dataset that could reliably differentiate between ambient noise and music, since the waltz music alternated between high and low output values throughout the orchestration.
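A 1D linear classifier reduces to a single threshold on one feature. The sketch below assumes that feature is the RMS loudness of an audio frame and that the threshold sits midway between the class means; neither detail is stated for this project, and the function names are hypothetical. On the robot the frames would come from PyAudio, which is left out here so the example stays self-contained.

```python
import math
from statistics import mean
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square loudness of one audio frame."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def fit_threshold(ambient_levels: Sequence[float],
                  music_levels: Sequence[float]) -> float:
    """Place the decision boundary midway between the two class means."""
    return (mean(ambient_levels) + mean(music_levels)) / 2


def is_music(level: float, threshold: float) -> bool:
    """Classify one loudness value as music (True) or ambient noise (False)."""
    return level > threshold


# Usage with made-up loudness levels from labeled recordings.
threshold = fit_threshold(ambient_levels=[1, 2, 3], music_levels=[9, 10, 11])
playing = is_music(rms([8, -8, 8, -8]), threshold)
```

This also shows why quiet passages are hard: a soft stretch of the waltz can fall below the threshold and look like ambient noise, so averaging the loudness over a longer window may help.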

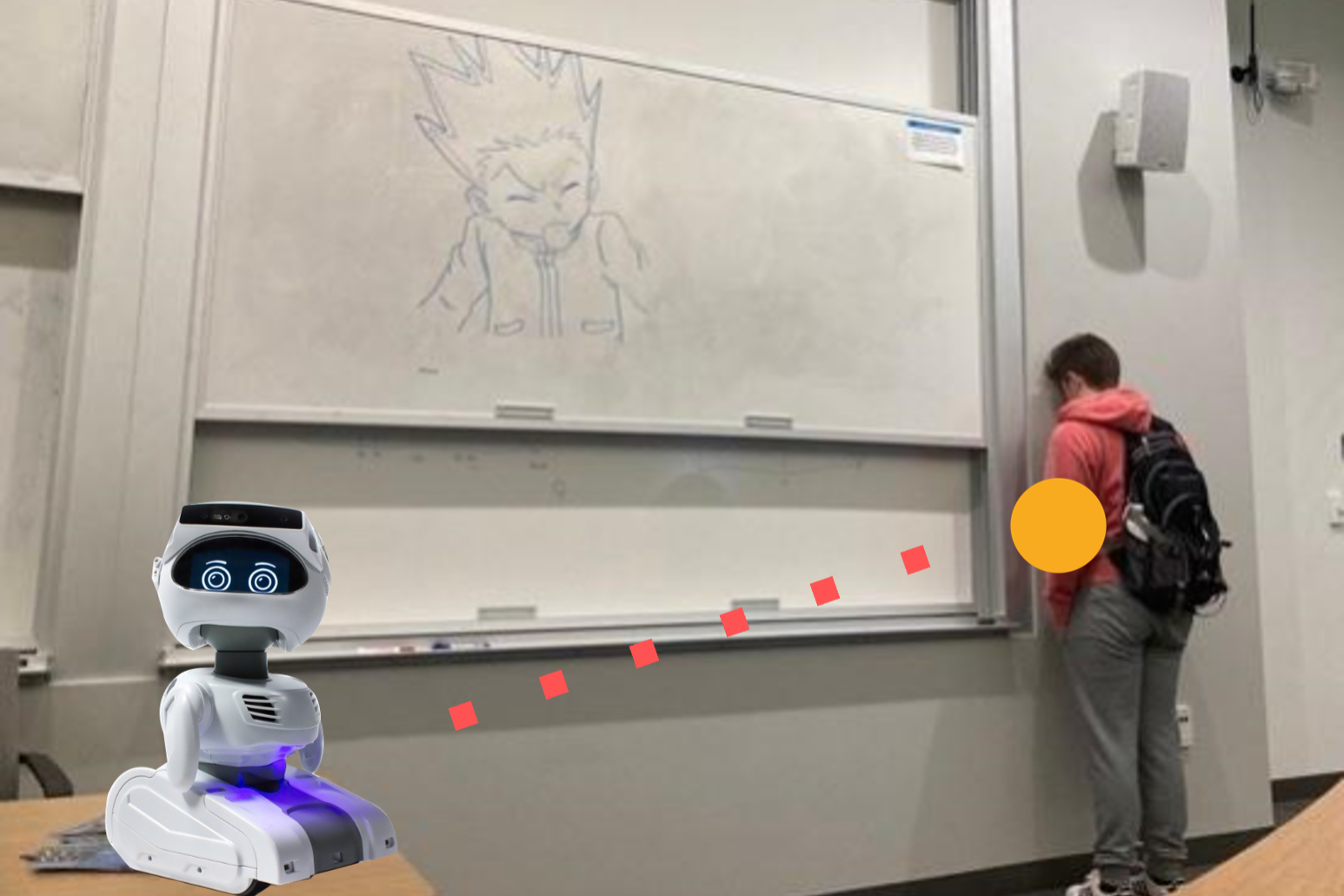

Human Robot Interaction

2021

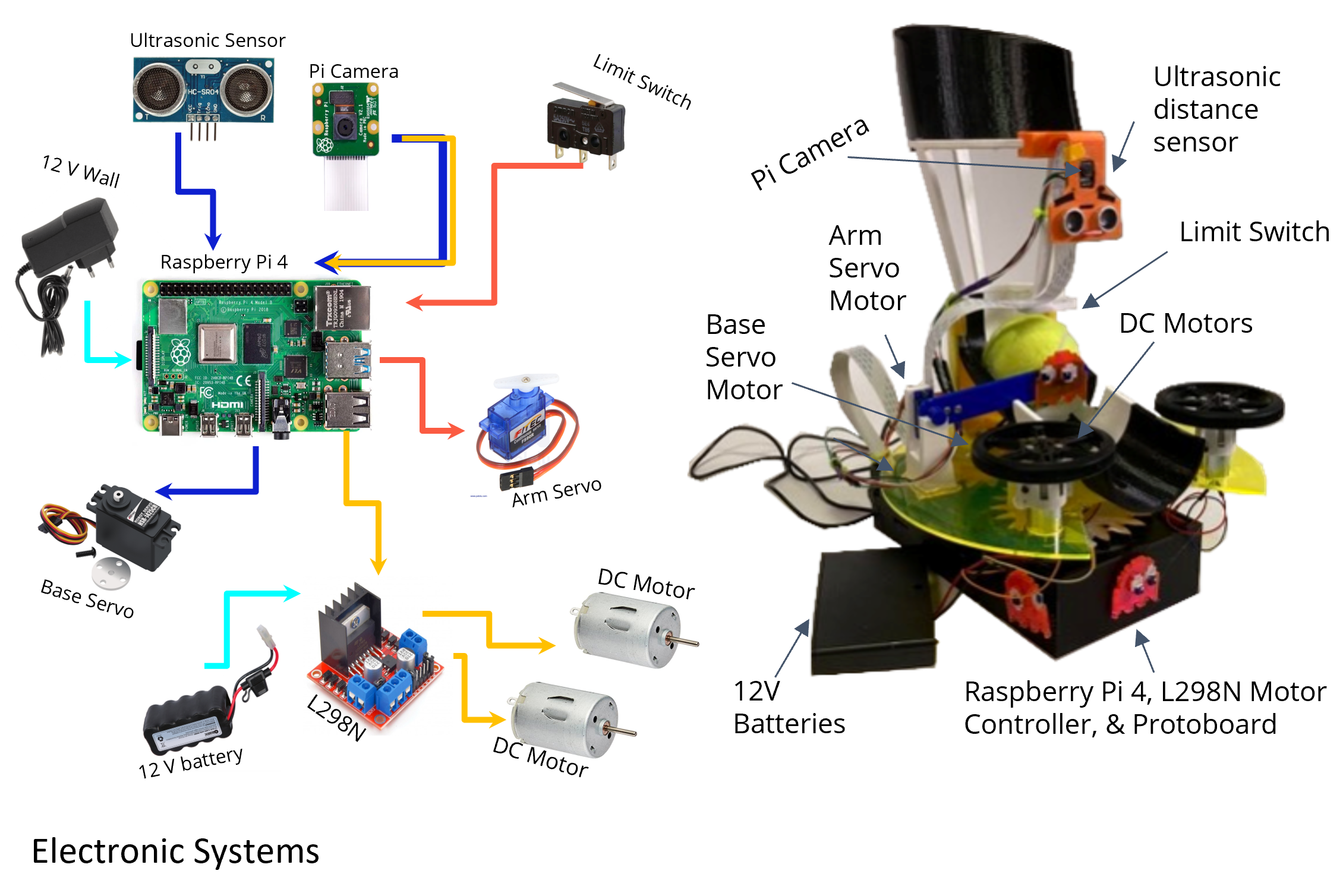

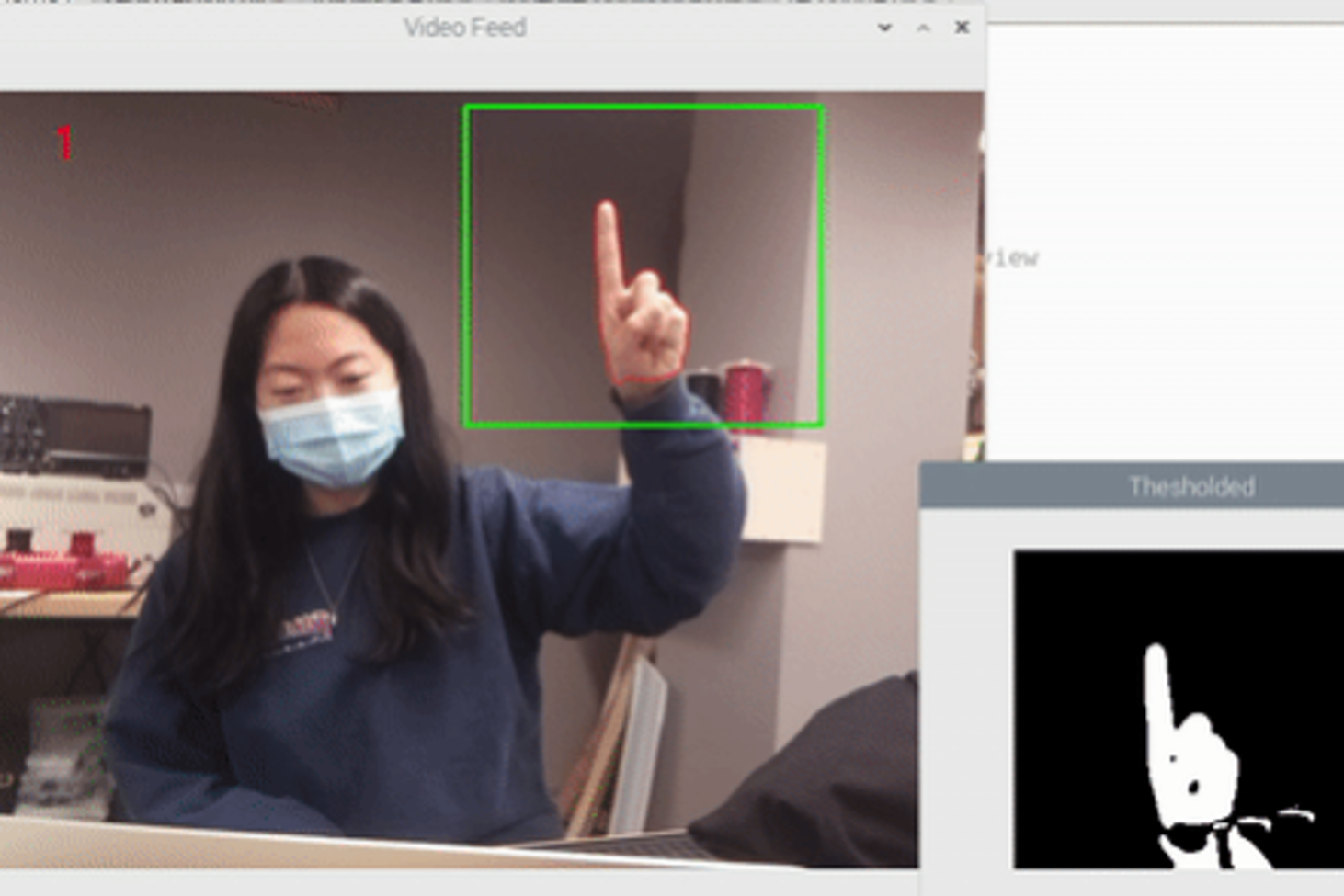

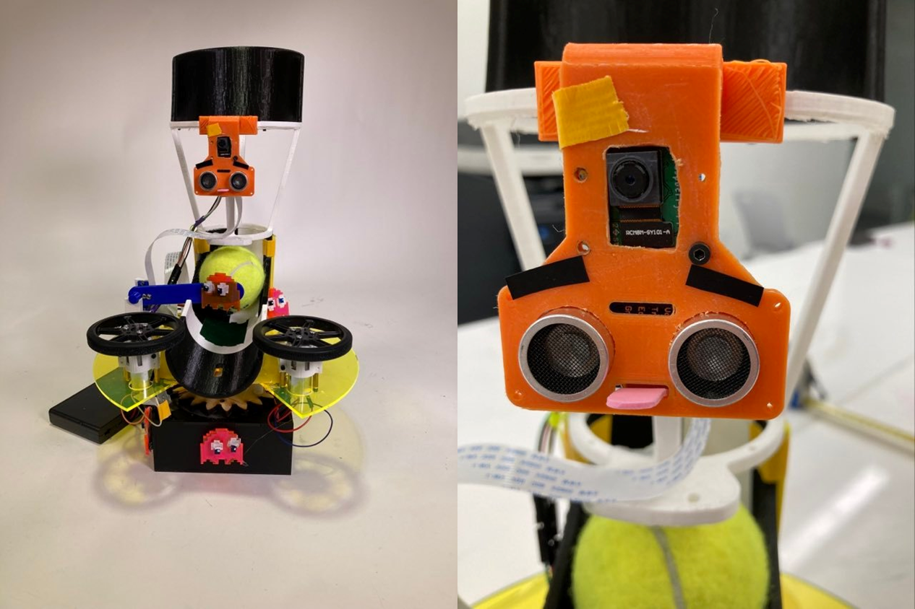

This robot uses OpenCV finger detection to identify and play catch with users. In each game, the robot sweeps to identify how many users are in range, then shoots a tennis ball to each user and receives it back when they indicate with their fingers that they are ready.

Balancing Robot

2021

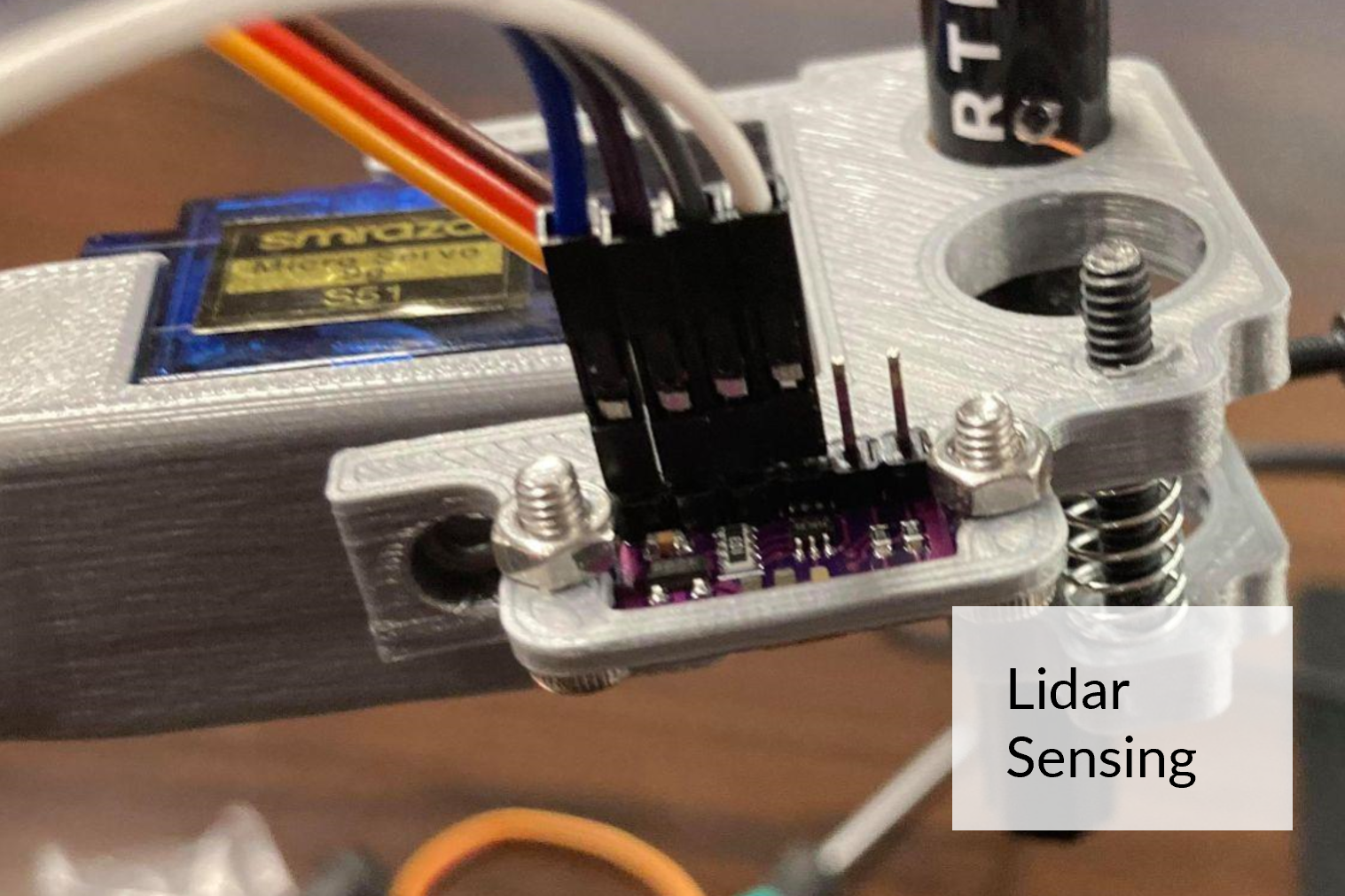

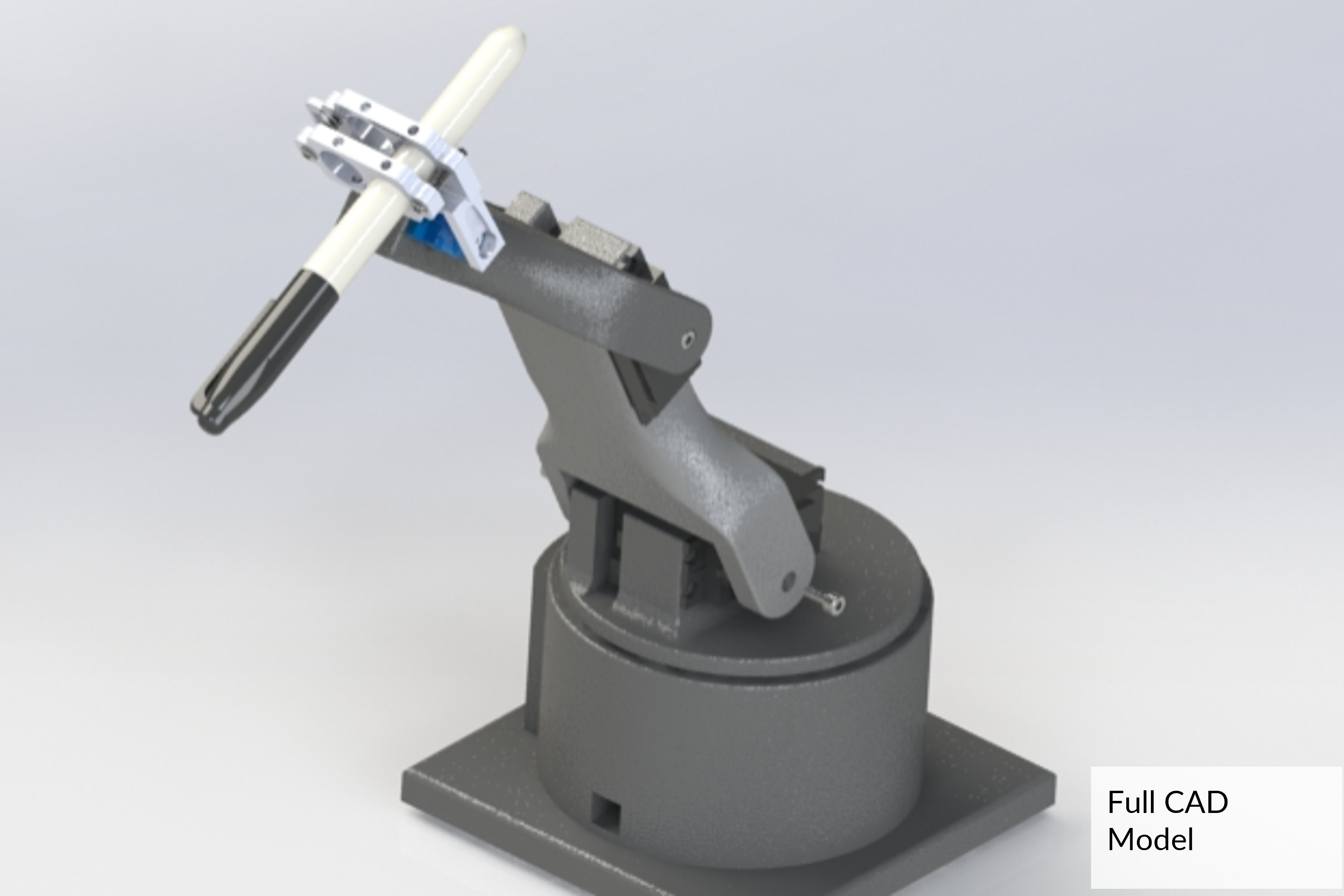

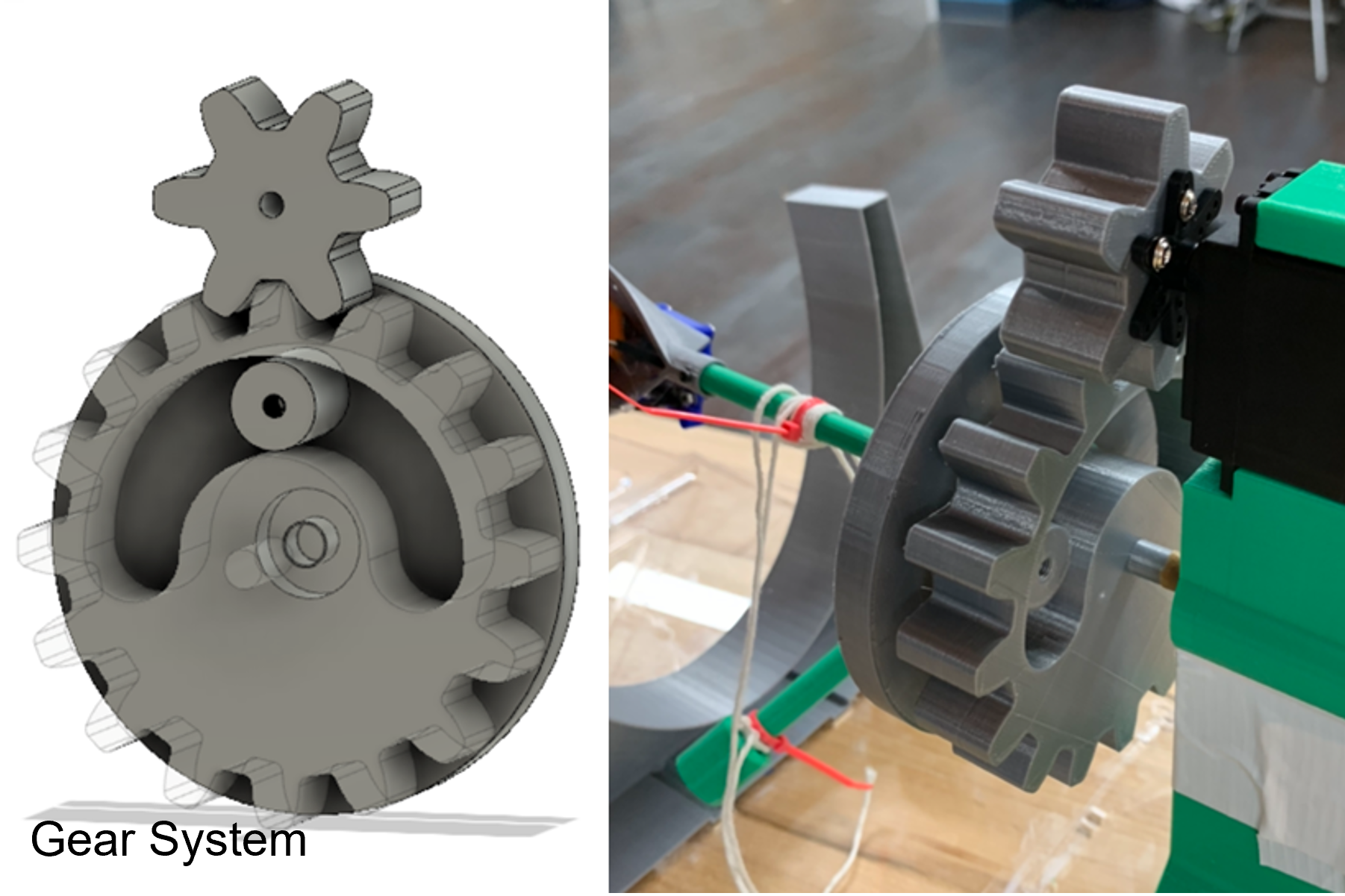

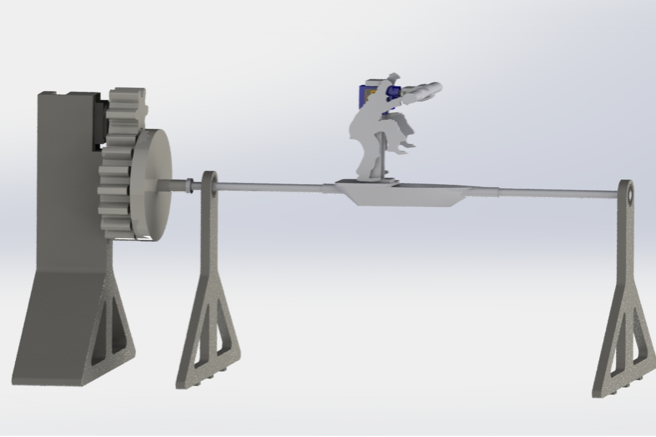

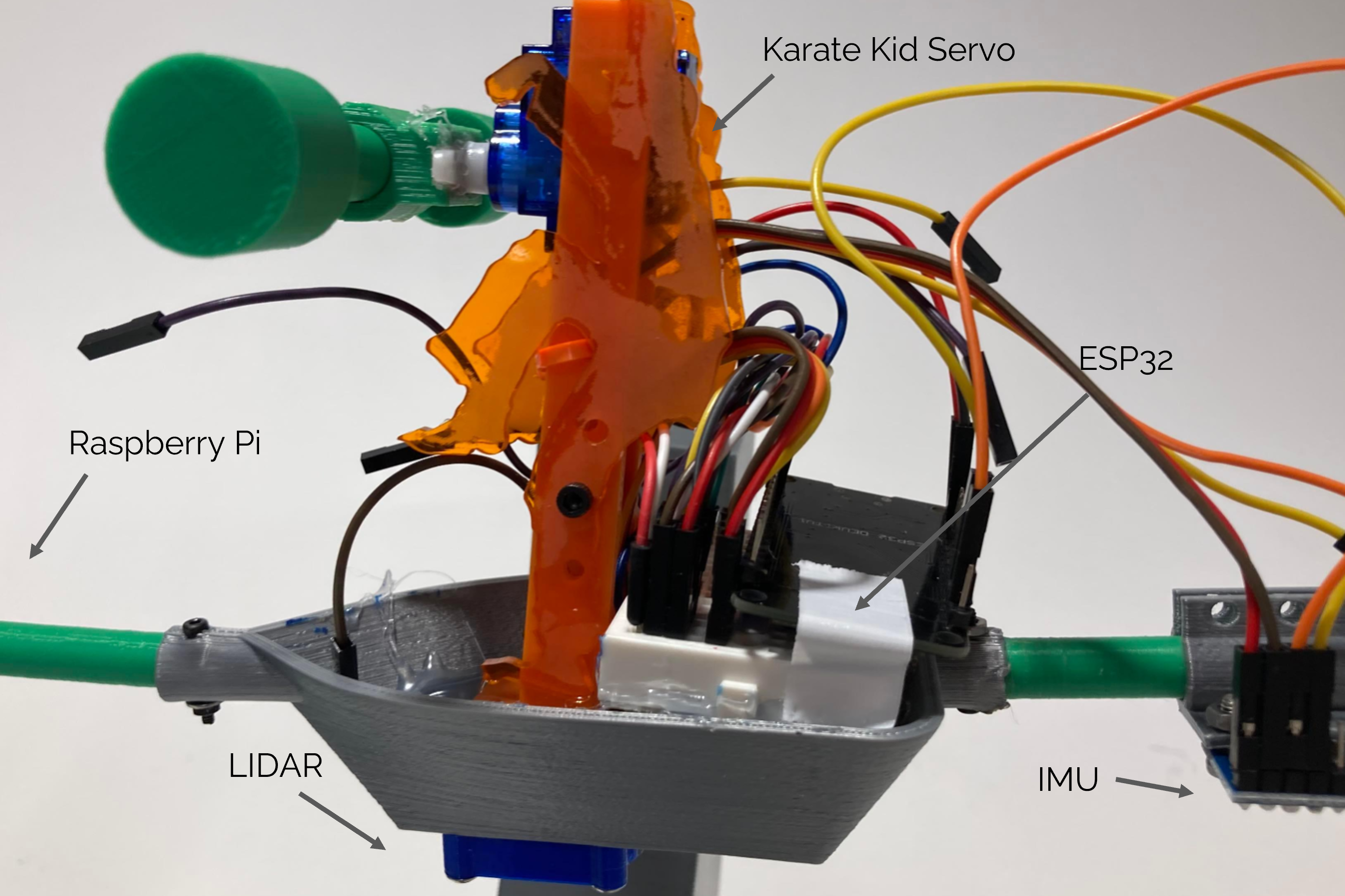

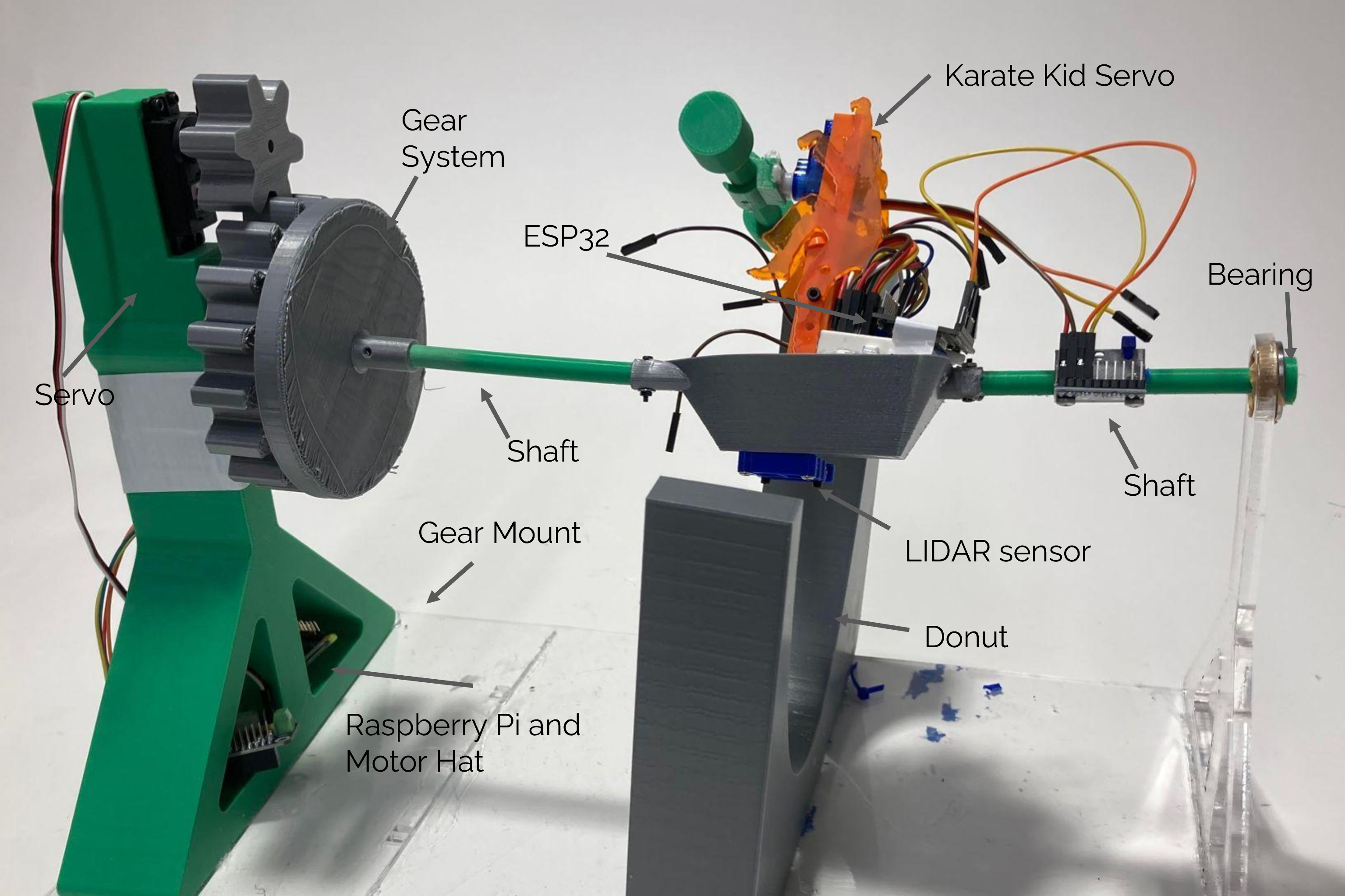

This balancing robot uses an IMU and LIDAR to sense the position of a figure and to keep the figure upright through the movement of a “balancing beam” mounted on the figure. A Gear System is used to reset the system while allowing the figure to fall 90 degrees to either side if it loses balance.

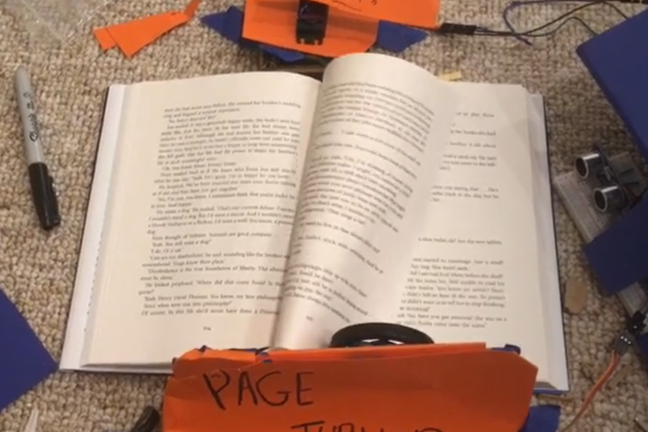

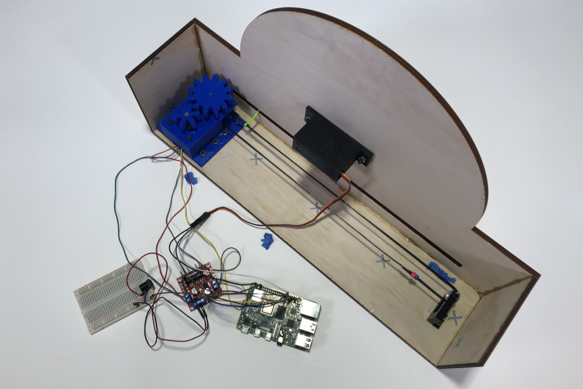

Assistive Page Turning Robot

2021

Turning a page requires gripping the thin space between one page and the next, separating the pages, and then having the arm control to turn a page over without ripping it. For someone with poor finger or joint dexterity, this task could be incredibly frustrating. I built a robotic page turner with gesture-activated control to decrease the dexterity needed to turn a page and to make this task more accessible.

Assistive Door Stopper

2021

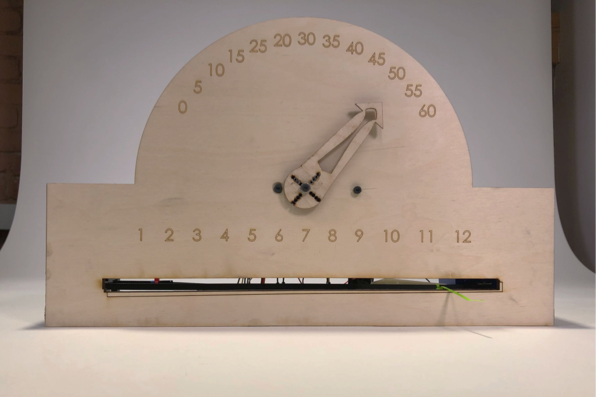

24-Hr Analog Clock Robot

2021

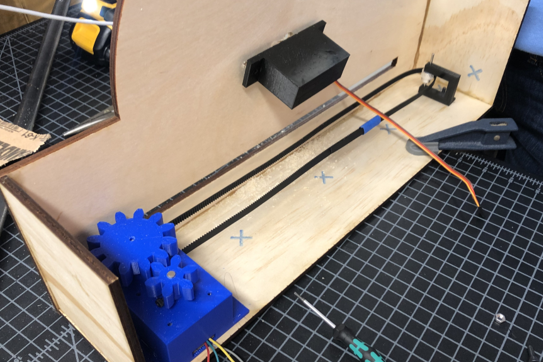

This analog clock has two time indicators. The upper, servo-driven indicator shows the minute, while the lower, stepper-driven indicator shows the hour. We use a gearbox so that the stepper can move a belt attached to the hour indicator. In our code, we translate the time value returned by the “datetime” function into “steps” to increment the motors.
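The time-to-steps translation can be sketched with Python's `datetime` module. The constants below are placeholders, not the clock's real values: the steps needed for the full 24-hour travel, the gear ratio, and the servo sweep angle are all assumptions, as are the function names.

```python
from datetime import datetime

STEPS_FULL_TRAVEL = 200   # hypothetical: stepper steps for the full 24-hour travel
GEAR_RATIO = 1.0          # hypothetical: gearbox multiplier between stepper and belt
SERVO_SWEEP_DEG = 180.0   # hypothetical: servo angle covering 0 to 60 minutes


def hour_steps(now: datetime) -> int:
    """Absolute stepper position (in steps) for the hour indicator."""
    day_fraction = (now.hour + now.minute / 60) / 24
    return round(day_fraction * STEPS_FULL_TRAVEL * GEAR_RATIO)


def minute_angle(now: datetime) -> float:
    """Servo angle (in degrees) for the minute indicator."""
    return now.minute / 60 * SERVO_SWEEP_DEG


def steps_to_advance(current_steps: int, now: datetime) -> int:
    """How many steps to move from the current position to the target."""
    return hour_steps(now) - current_steps


# Usage: at noon the hour indicator sits halfway along its travel.
noon = datetime(2021, 1, 1, 12, 0)
target = hour_steps(noon)
```

Tracking an absolute target and moving by the difference keeps rounding errors from accumulating over the day, which would happen if a fixed number of steps were added every minute.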

Public Art Display

2020

I created a public art display with color changing LEDs and spinning disco balls that reacts to the motions of users moving through an arch. The display uses Raspberry Pi 4 servers and clients to communicate over the internet.

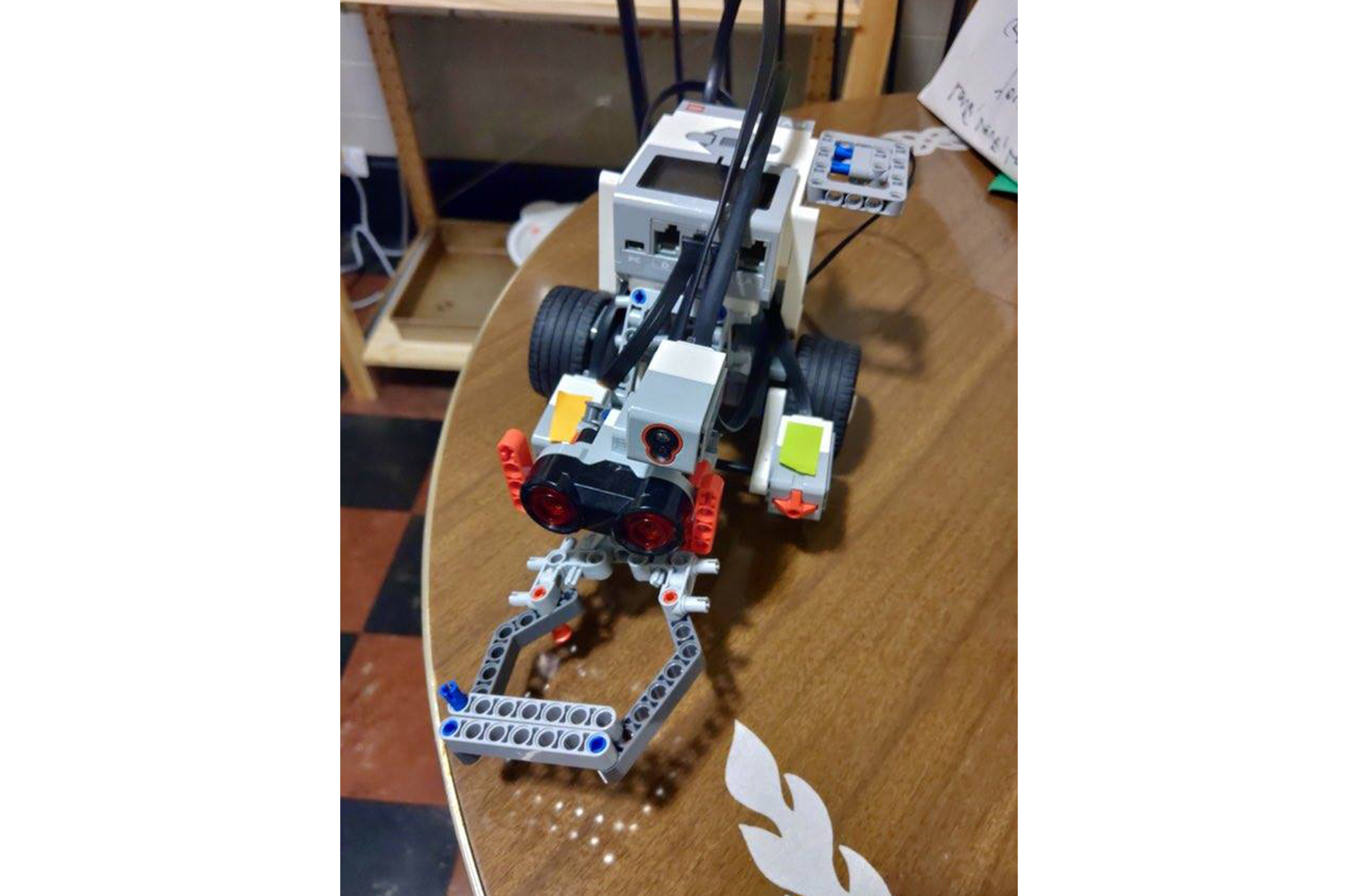

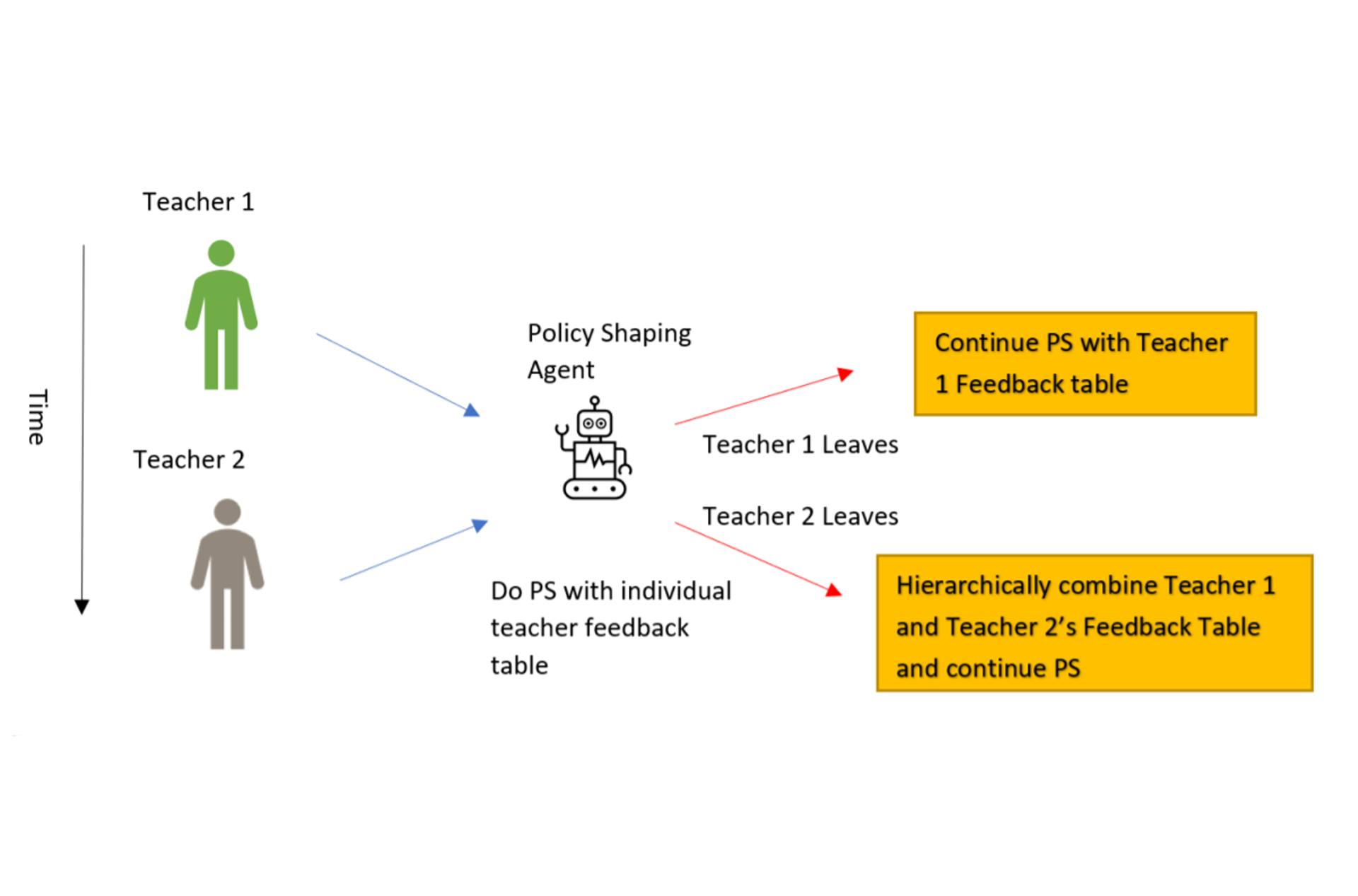

CHORE robot

2020

I conducted a preliminary study on different policy shaping algorithms for cleaning robots adapting to different household social dynamics. The goal of this study was to tailor the behavior of household cleaning robots both to satisfy individual users within the house and to fit well with household dynamics overall. We tested four different hierarchical policy shaping algorithms in an online study to determine which robot responses users would view as socially appropriate.

3DS Max Animation

2020

Animation created and rendered in 3Ds Max using a Biped skeleton for character movement. All models for this animation were built in Inventor.

Trinity Firefighting

2019

In Winter 2019, I built the mechanical design aspects of a robot that could track and blow out flames for the Trinity Fire Fighting Robotics Competition with my teammate, Eric.

Pumpkin Lamp

2019

Deflection Finite Element Analysis

2019

I used SolidWorks Finite Element Analysis and Castigliano’s analysis to design a structure that deflects 0.5 in while meeting a specified set of criteria. The approximated model was then cut with a waterjet and tested using an Instron machine.

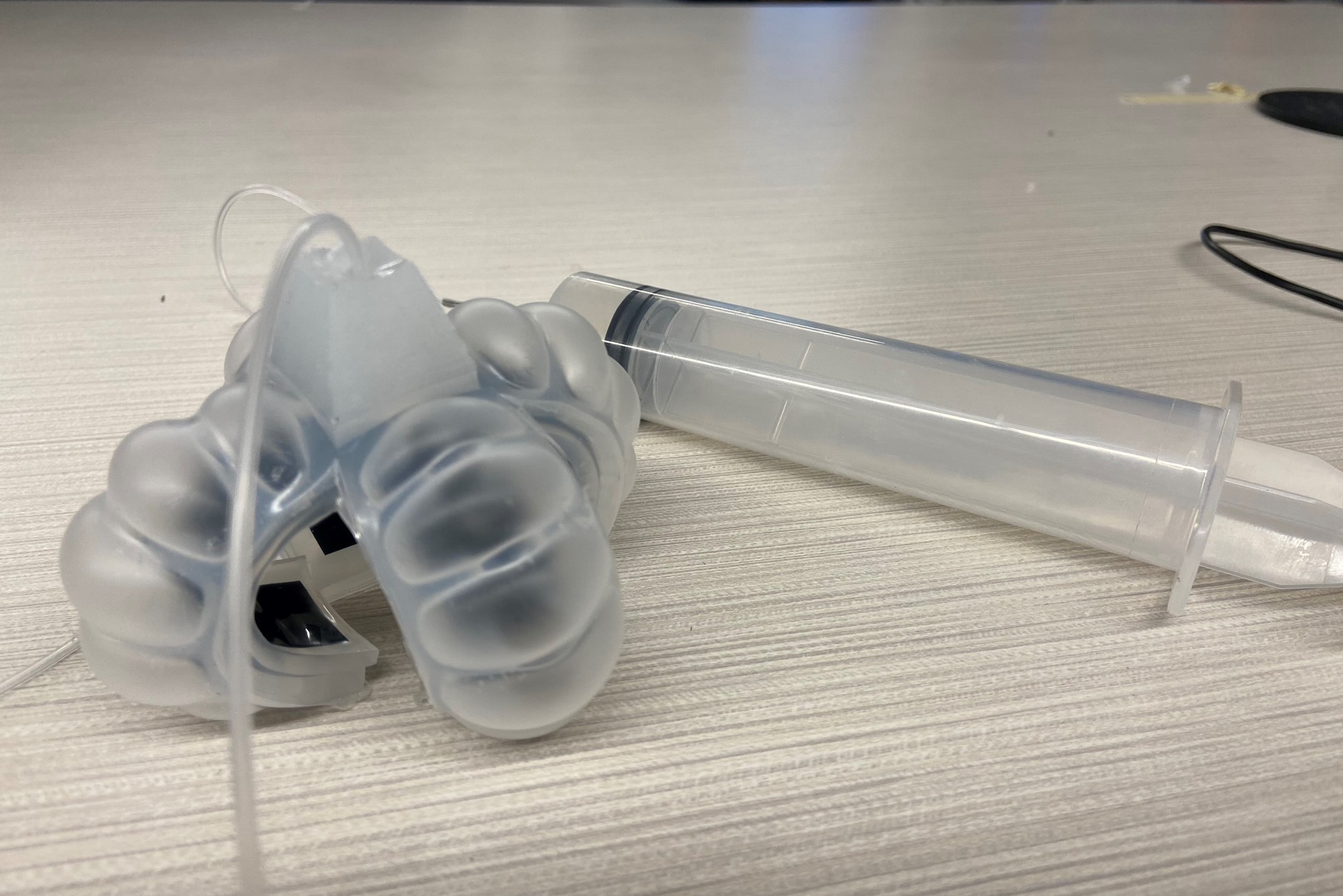

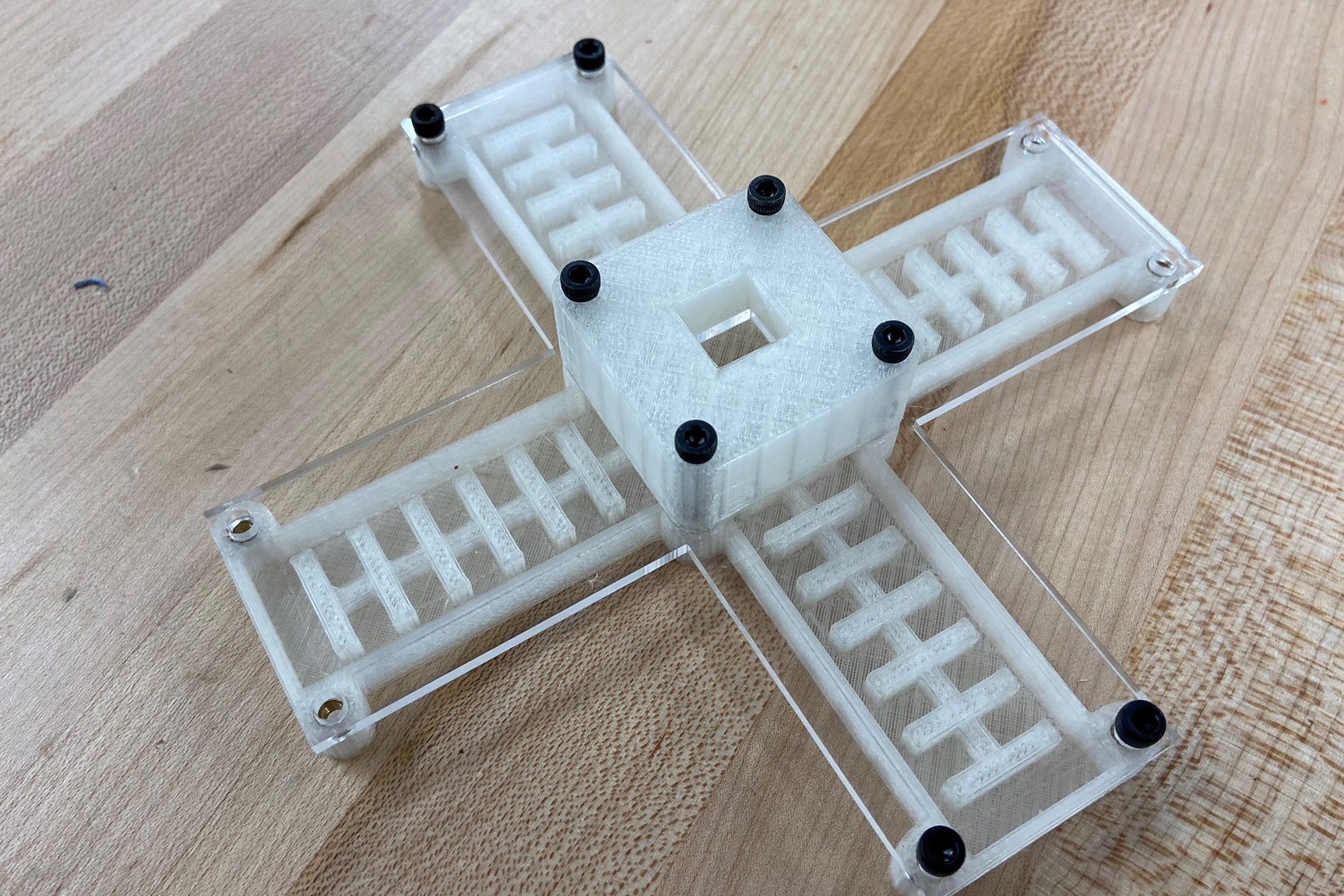

Soft Robotics Exosuit / Silicone 3D Printer

2019

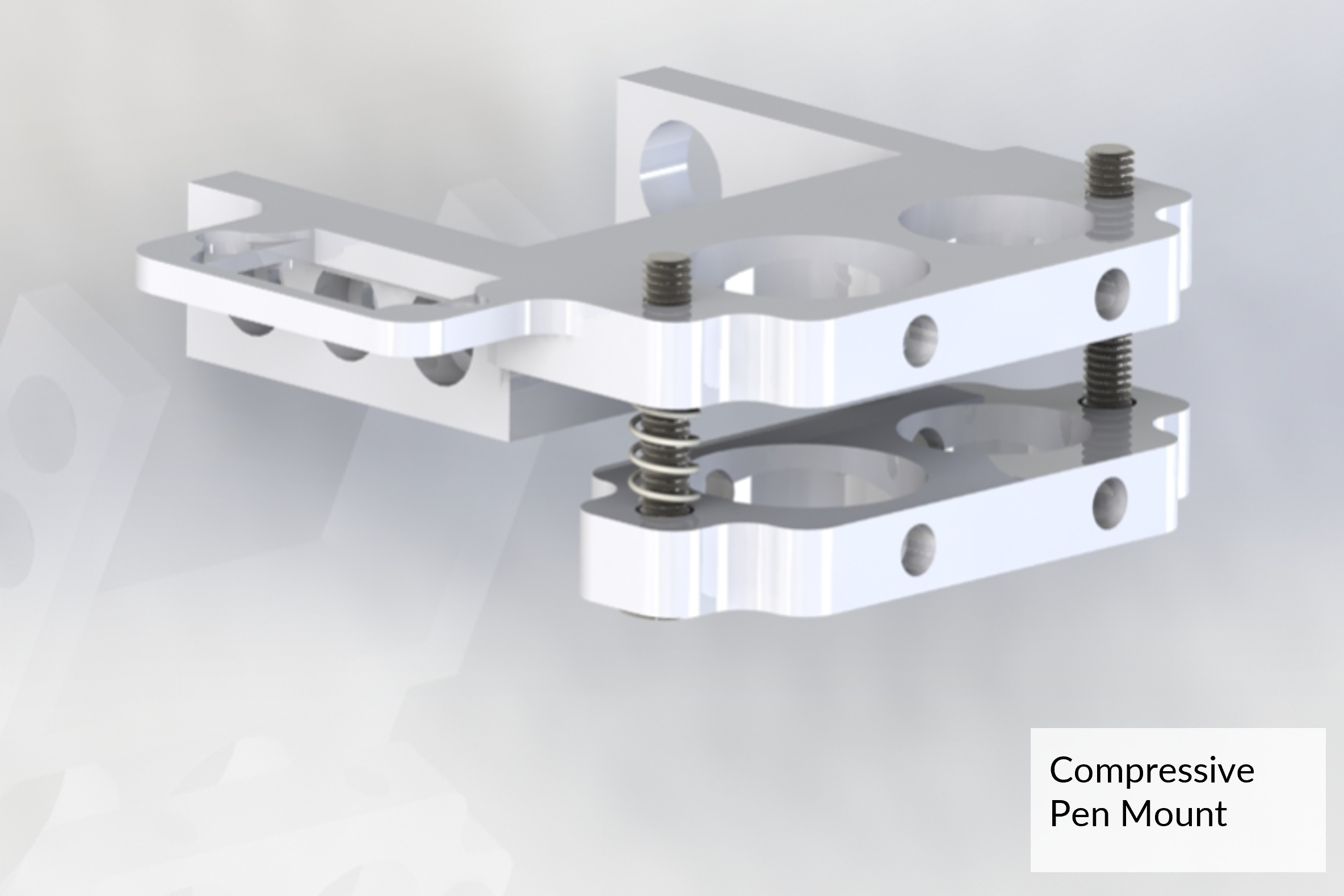

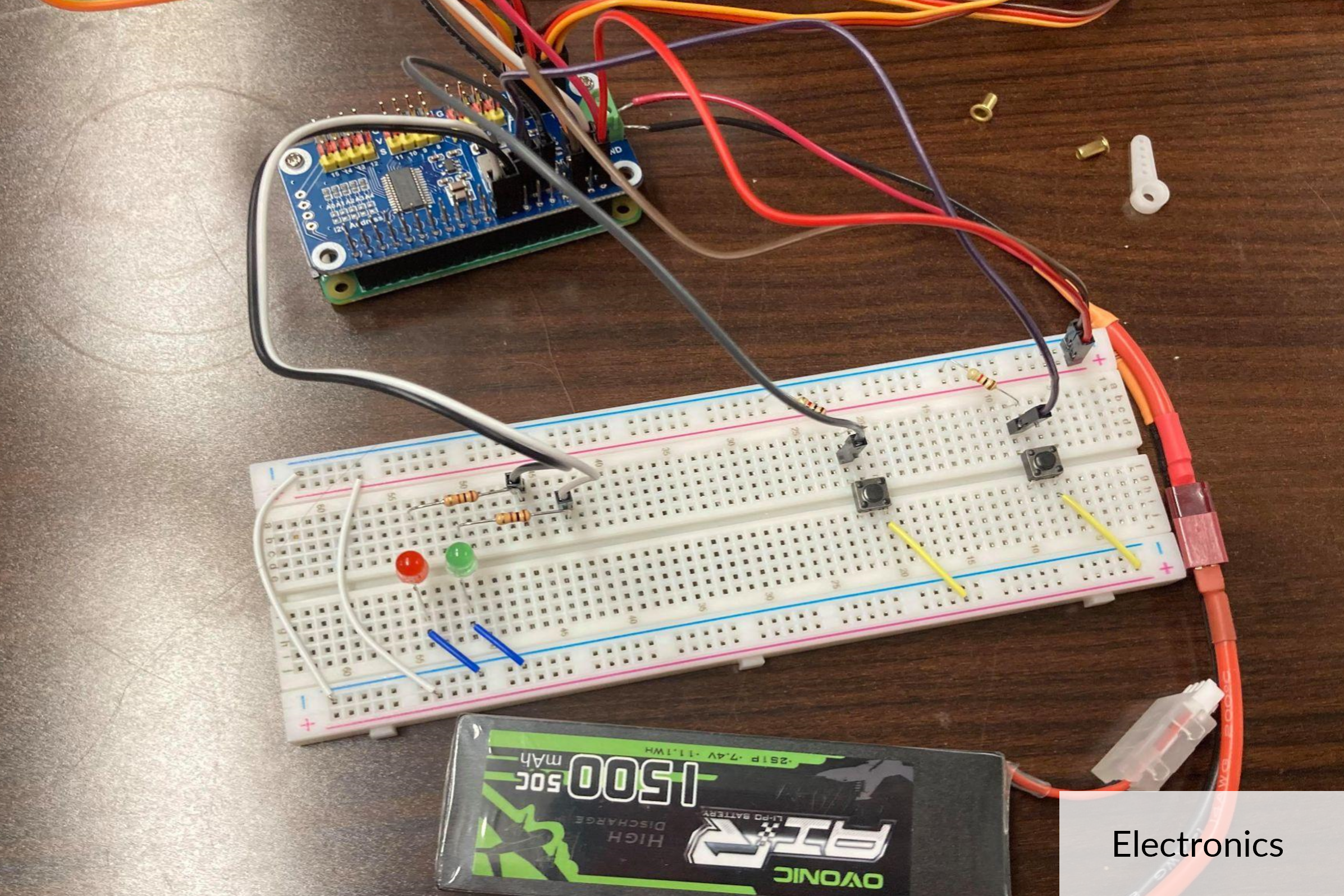

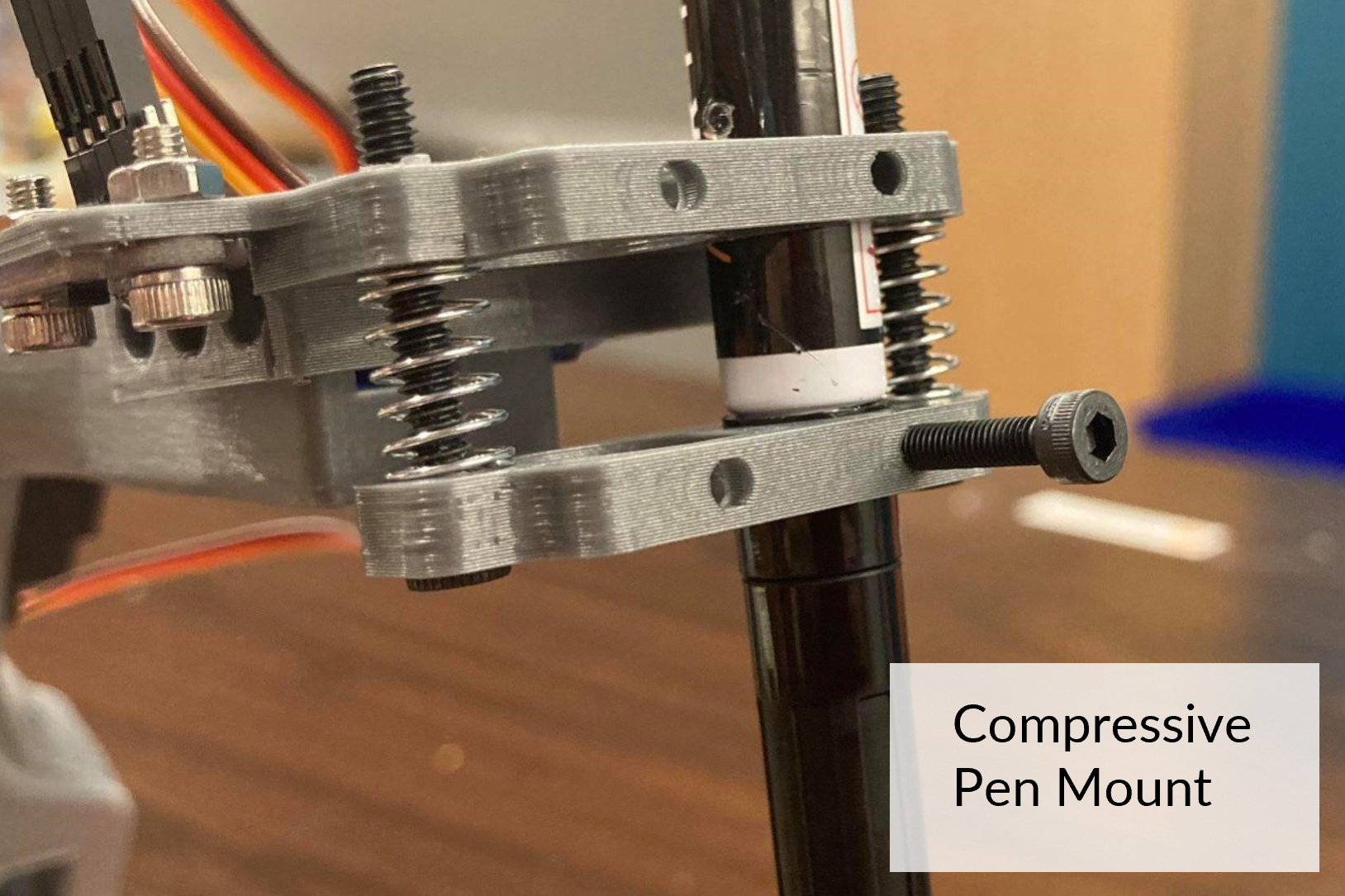

In 2019, I worked on soft robotic grippers and on redesigning a silicone 3D printer with the Tufts Soft Robotics Exosuit Research team. I modeled and fabricated several different designs for silicone actuators to allow better airflow for a soft robotic exosuit gripper using CAD, laser cutting, and silicone mold casting. I also improved the control board design and mapped the electronics in Fritzing. To extend the team’s silicone 3D printer design, I built a new attachment for the print extruder.

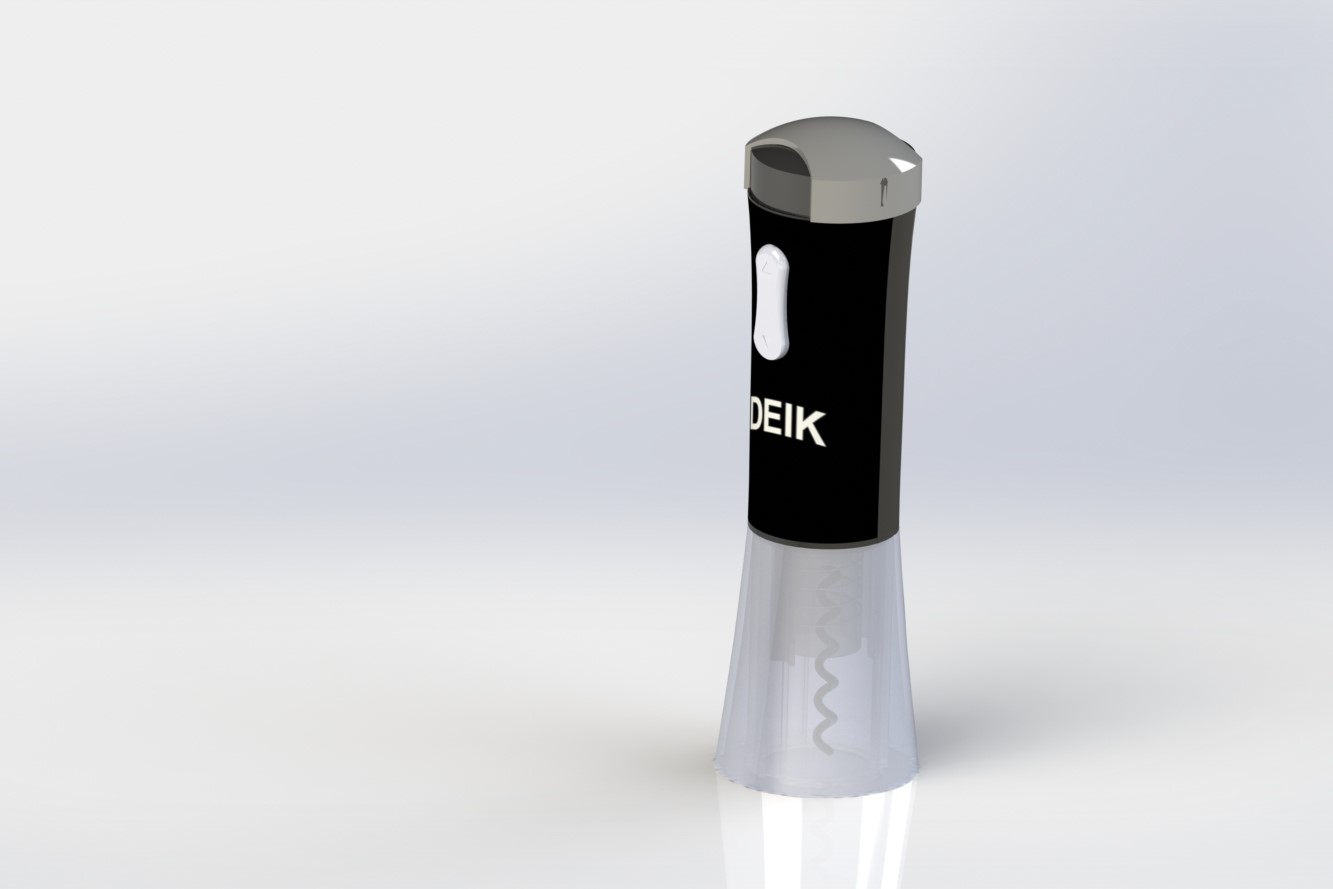

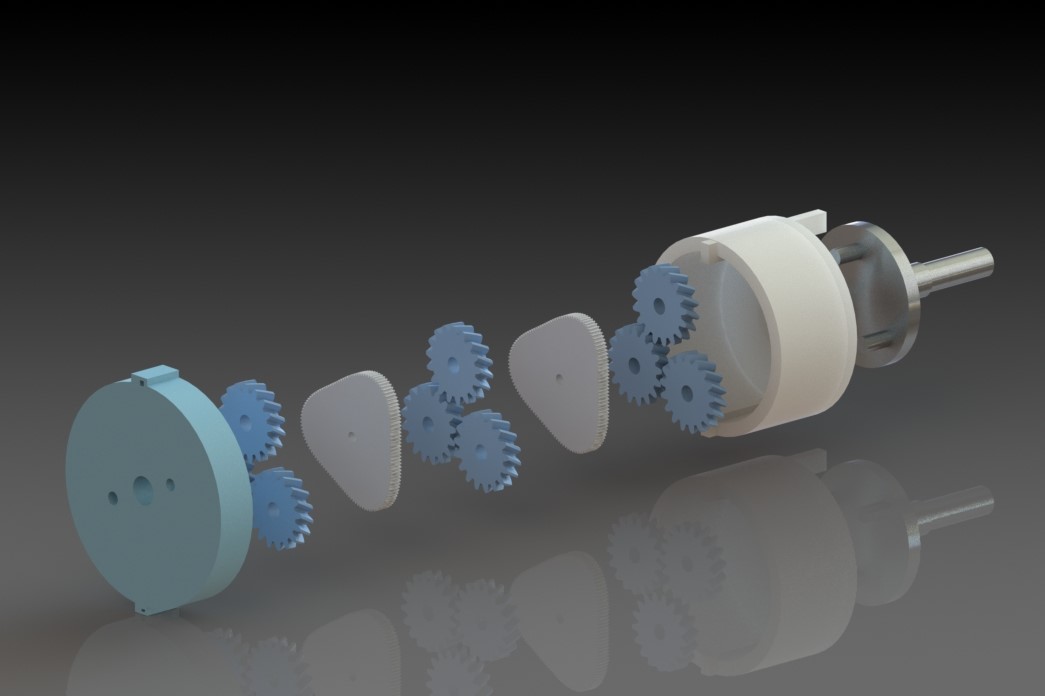

DEIK Electric Wine Opener Teardown

2019

Disassembled and CAD modeled the components of a DEIK wine opener in SolidWorks with a team of four.

CEEO Innovations

2019

Built the website and documentation platform for CEEO Innovations on GitHub Pages using JavaScript, jQuery, HTML/CSS, Jekyll, Markdown, and git. The goal of this platform was to make it easy for non-coding users to create website pages using a modified version of Markdown that I programmed to allow sections within a page to have different styles.

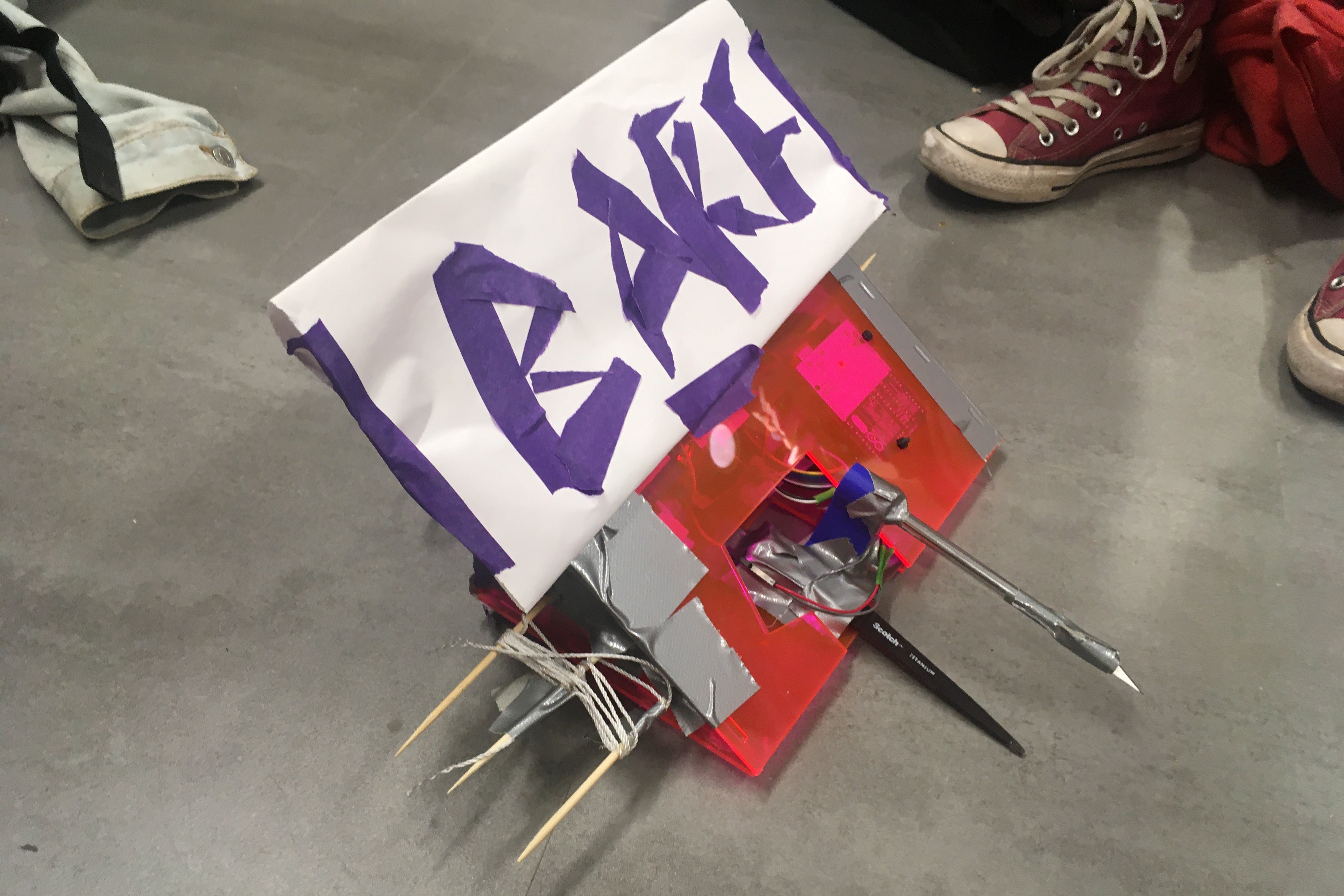

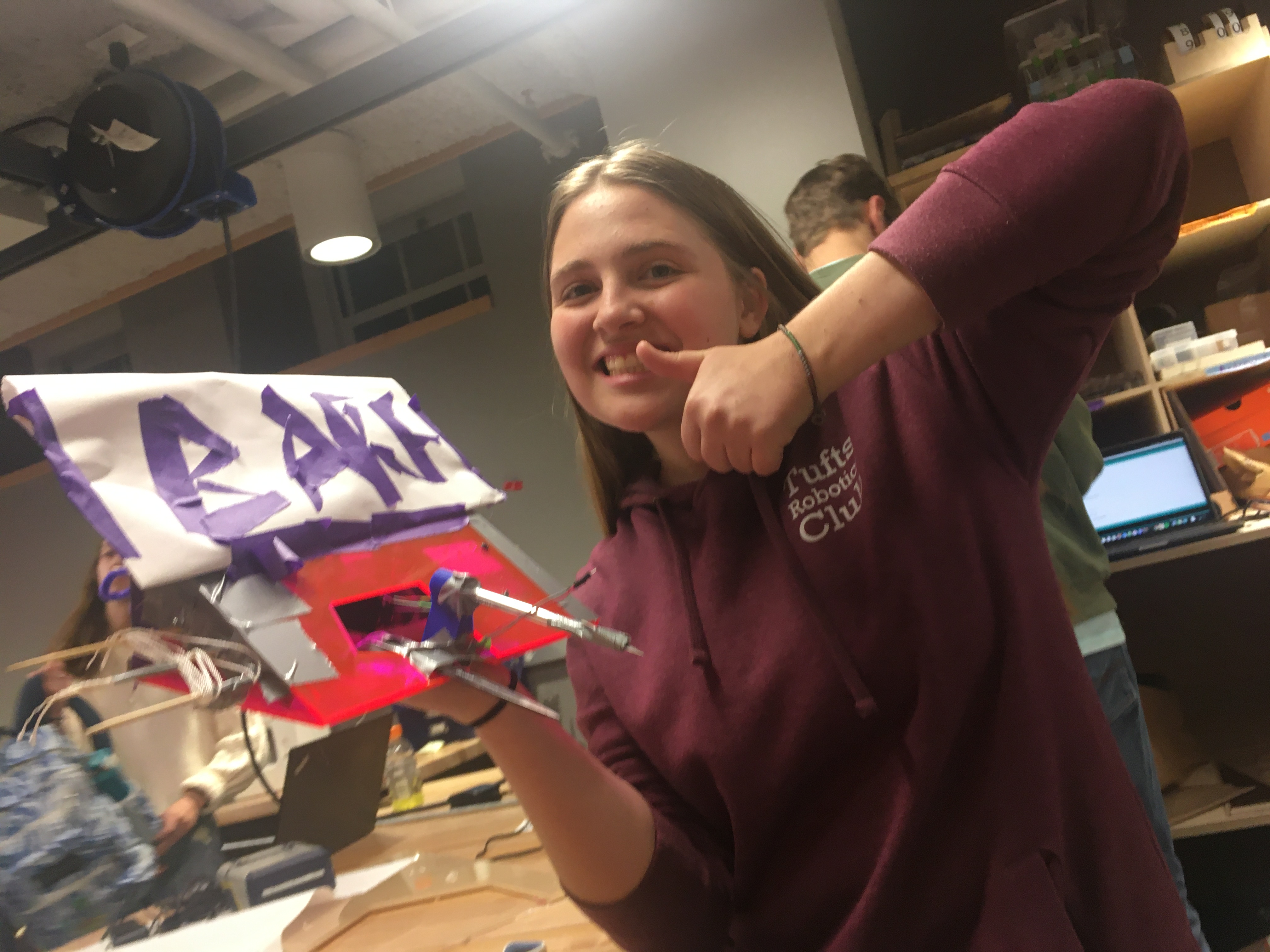

Battlebot

2019

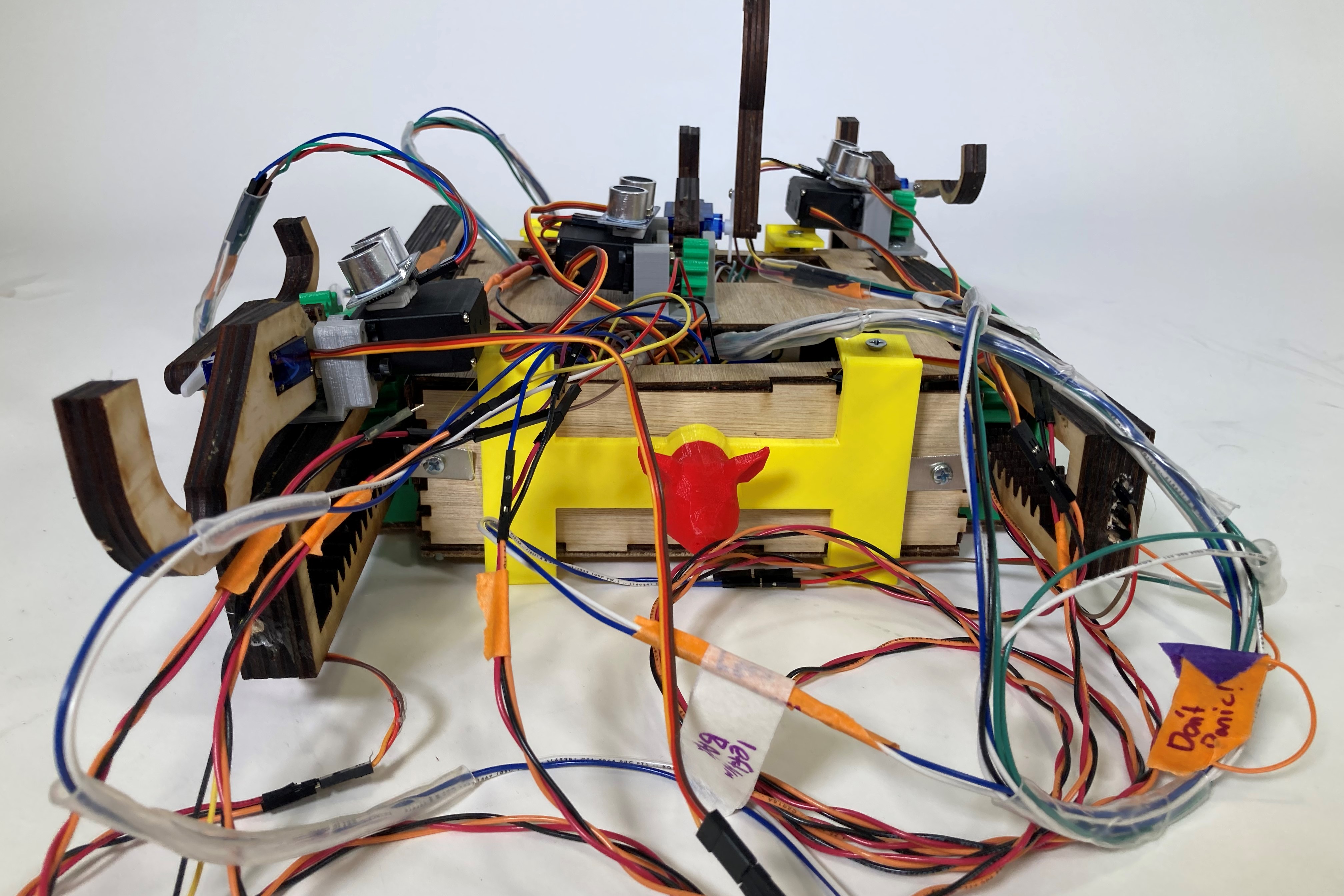

As a short project in Fall 2019, I worked with a team of four to create a battlebot from scratch to fight robots built by other students at Tufts University. I CADed, 3D printed, laser cut, and assembled the mechanical aspects of this robot.

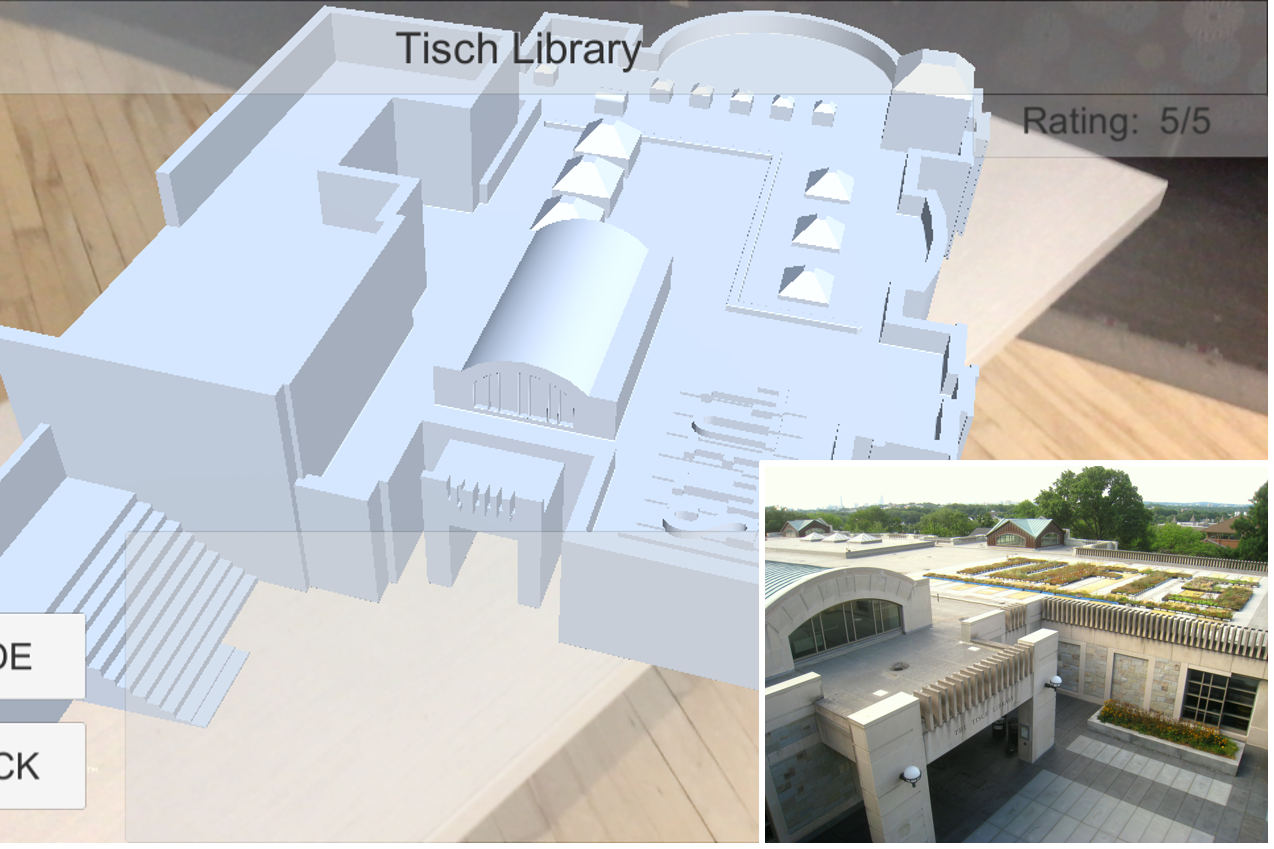

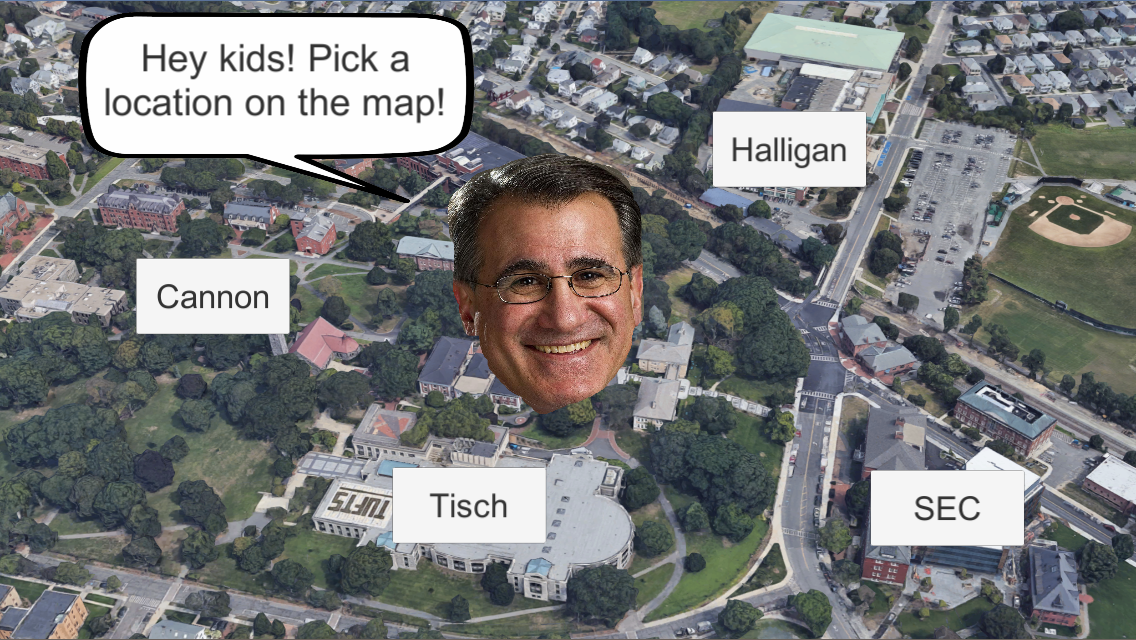

Tufts AR

2019

I built an Augmented Reality (AR) Unity app mapping the Tufts University campus with a five-person team over 24 hours at Tufts Polyhacks 2019. Users could leave reviews that appear alongside CAD building models in the app. I created 3D building models in Autodesk Fusion and built the website in HTML/CSS. This project won the Trip Advisor award for best use of Travel API.

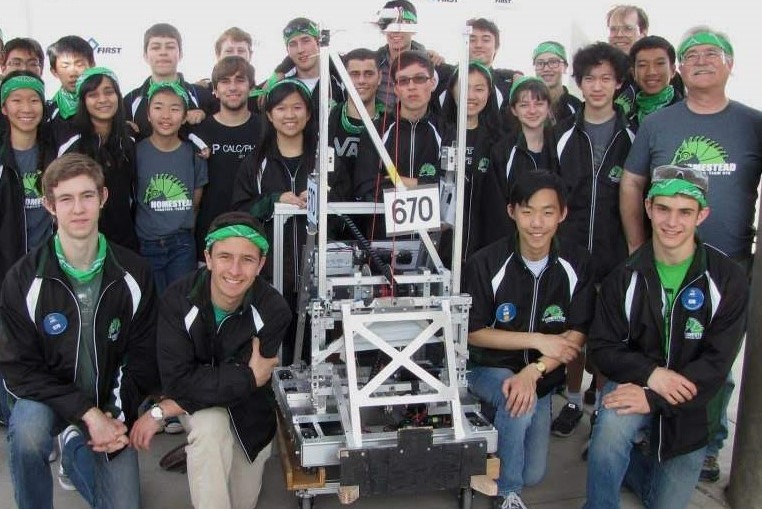

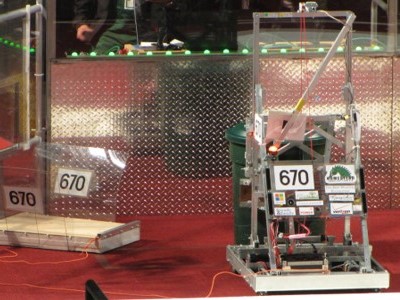

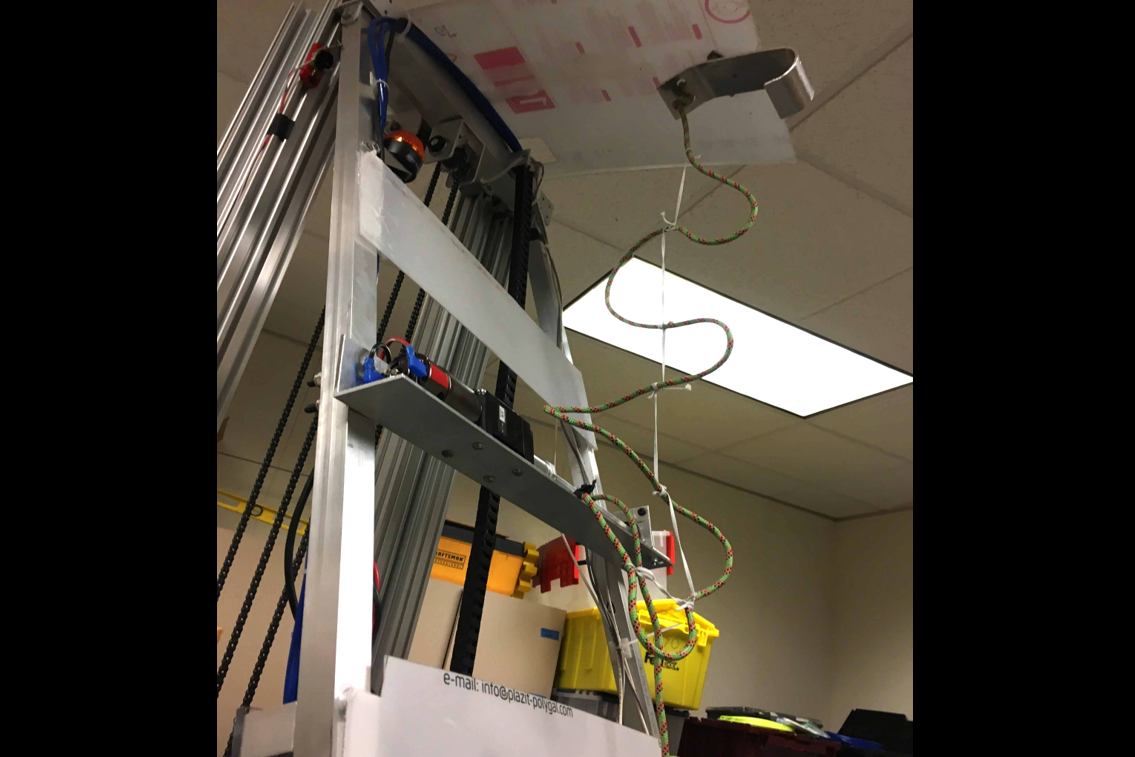

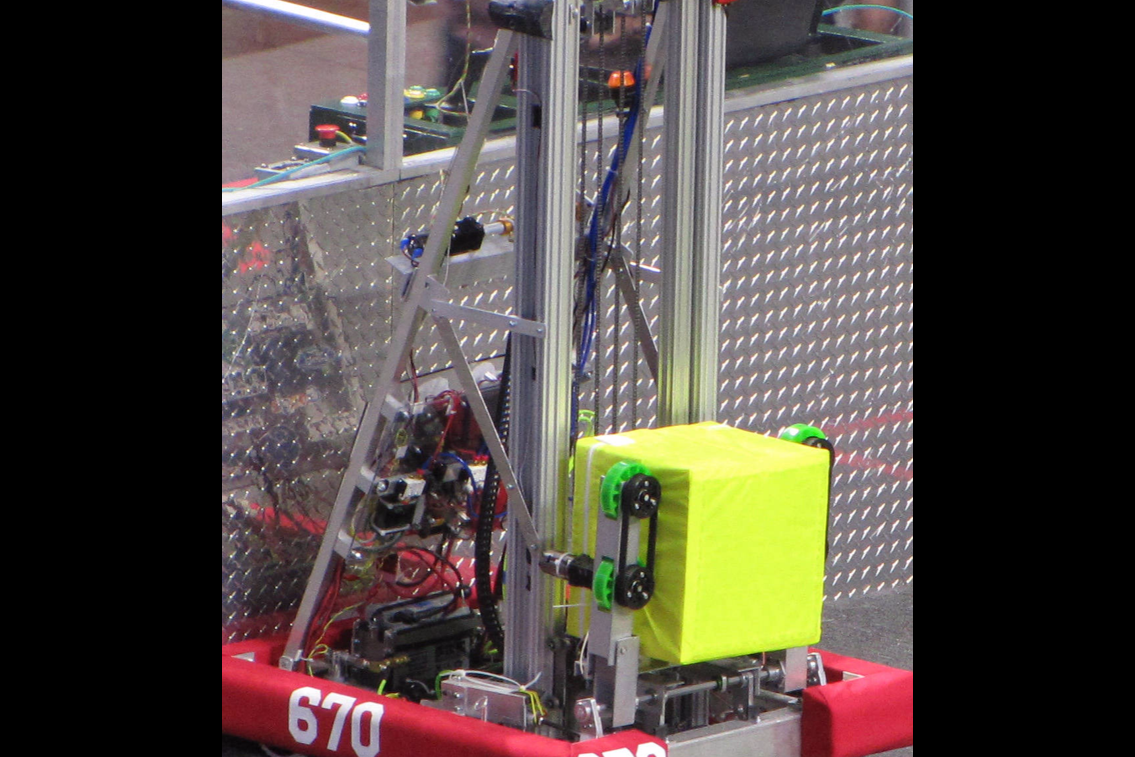

FRC Robot for “Power Up”

2018

This robot was built for the 2018 FIRST Robotics Competition to stack latex-covered cubes on a moving platform and climb a rung alongside other robots. It placed 7th out of 60 teams at the 2018 Silicon Valley Regional, placed 3rd out of 38 teams at CalGames 2018, and was a regional finalist at the 2018 Utah Regional. I built the climbing mechanism for our robot and ran the Mechanical Design team with a partner.

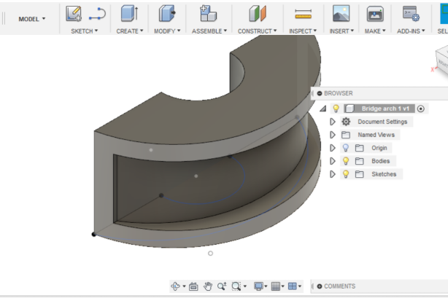

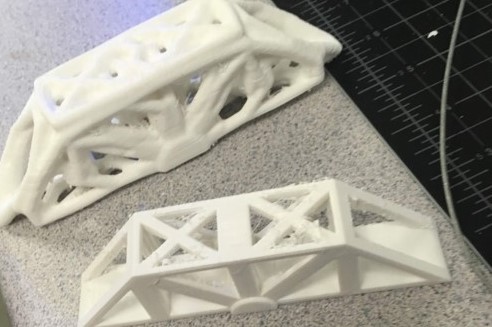

Generative Bridge

2018

In Fall 2018, I explored the strength applications of generative design in truss and arched bridges using Autodesk Fusion and Meshmixer.

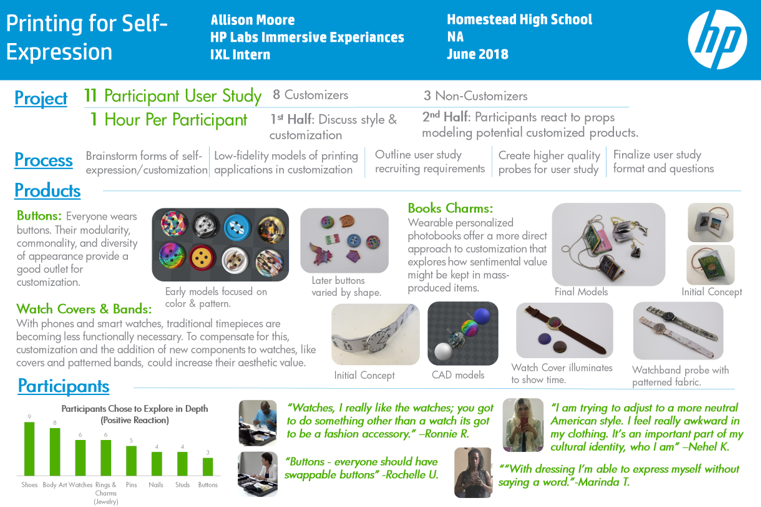

HP Labs IXL

2017

In 2017, I interned with the Immersive Experiences Lab (IXL) to study the applications of 3D printing and customization in clothing. Over the summer, I developed prototype customizable objects and conducted a qualitative study on user reactions. I also worked independently on a project exploring the idea of comfort/nonverbal communication through wearable technology.

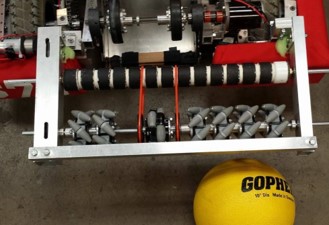

FRC Robot for “Stronghold”

2016

This robot was built for the 2016 FIRST Robotics Competition to navigate an obstacle course while collecting and shooting dodgeballs. During this project, I led the fabrication of our robot with a partner. I learned about turning designs into physical models during this process.

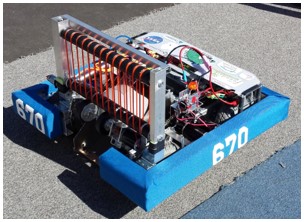

FRC Robot for “Recycle Rush”

2015

This robot was built with a team for the 2015 FIRST Robotics Competition to lift and stack totes. I mostly worked on fabrication, and it was my first robotics project!